I. PyTorch fine-tuning: training on your own images

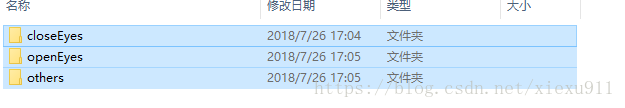

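Images are read here with the ImageFolder dataset that ships with torchvision. It expects a single root folder that contains one subfolder per class, with every image placed in the subfolder of its class.

For example, I want to distinguish three classes: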

# prepare the data set
# training data
train_data = torchvision.datasets.ImageFolder(
    "F:/eyeDataSet/trainData",
    transform=transforms.Compose([
        transforms.Resize(256),      # Resize replaces the deprecated transforms.Scale
        transforms.CenterCrop(224),
        transforms.ToTensor(),
    ]))
print(len(train_data))
train_loader = DataLoader(train_data, batch_size=20, shuffle=True)
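To make the expected folder layout concrete, here is a minimal sketch that builds a throwaway directory tree and derives the label mapping the way ImageFolder does, namely by sorting the subfolder names and numbering them from 0. The class names and the `find_classes` helper are illustrative assumptions, not taken from the original data set, and the sketch uses only the standard library.

```python
import os
import tempfile

def find_classes(root):
    """Sorted subfolder names become the classes; their positions become the labels."""
    classes = sorted(entry.name for entry in os.scandir(root) if entry.is_dir())
    class_to_idx = {name: idx for idx, name in enumerate(classes)}
    return classes, class_to_idx

with tempfile.TemporaryDirectory() as root:
    # Hypothetical class names; each class gets its own subfolder under the root.
    for name in ("normal", "cataract", "glaucoma"):
        os.makedirs(os.path.join(root, name))
        # An empty placeholder file stands in for a real image.
        open(os.path.join(root, name, "img_0.png"), "wb").close()
    classes, class_to_idx = find_classes(root)

print(classes)
print(class_to_idx)
```

The labels returned by the DataLoader are these integer indices, which is why the training code later treats batch_y as class indices rather than one-hot vectors.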

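Next comes fine-tuning the network. In PyTorch you can modify the network and then train either all of its parameters or only a subset of them; here I train only the final fully connected layer.

torchvision provides many commonly used models, such as ResNet, VGG and AlexNet.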

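# prepare the model
# (newer torchvision releases use the weights= argument instead of pretrained=True)
mode1_ft_res18 = torchvision.models.resnet18(pretrained=True)
# freeze every pretrained parameter
for param in mode1_ft_res18.parameters():
    param.requires_grad = False
# replace the final fully connected layer with a new 3-class layer, which is trainable by default
num_fc = mode1_ft_res18.fc.in_features
mode1_ft_res18.fc = torch.nn.Linear(num_fc, 3)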

Define the optimizer. Note that only the parameters of the last layer are passed to it:

# loss function and optimizer
criterion = torch.nn.CrossEntropyLoss()
# only the last fc layer is trained
optimizer = torch.optim.Adam(mode1_ft_res18.fc.parameters(), lr=0.001)

With the hyperparameters defined, training can begin.

# start training
# labels are class indices, not one-hot vectors
EPOCH = 1
for epoch in range(EPOCH):
    train_loss = 0.
    train_acc = 0.
    for step, data in enumerate(train_loader):
        # tensors are used directly; no Variable wrapper is needed since PyTorch 0.4
        batch_x, batch_y = data
        # out holds the raw class scores (logits), shape batch_size * num_classes,
        # e.g. [-1.1009 0.1411 0.0320] for one sample; the prediction is the index of the maximum
        out = mode1_ft_res18(batch_x)
        loss = criterion(out, batch_y)
        train_loss += loss.item()  # .item() extracts the Python number from a 0-dim tensor
        # pred is the predicted class, batch_y is the true label
        pred = torch.max(out, 1)[1]
        train_correct = (pred == batch_y).sum()
        train_acc += train_correct.item()
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()
        if step % 14 == 0:
            # criterion already averages over the batch, so the loss is averaged over steps
            print("Epoch: ", epoch, "Step", step,
                  "Train_loss: ", train_loss / (step + 1), "Train acc: ", train_acc / ((step + 1) * 20))

The test part is similar to the training part, so it is not walked through step by step here.
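The prediction step used in both the training and the test loop (taking the index of the largest score in each row of out) can be illustrated without PyTorch. The sketch below uses the sample scores quoted in the code comments for its first row; the other two rows and all the labels are made-up values, and `softmax` and `argmax` are small helper functions written for this illustration.

```python
import math

def softmax(logits):
    """Turn raw scores into probabilities (shifted by the max for numerical stability)."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def argmax(values):
    """Index of the largest value, the plain-Python analogue of torch.max(out, 1)[1]."""
    return max(range(len(values)), key=values.__getitem__)

# One row per sample, one column per class (batch_size * num_classes).
batch_out = [
    [-1.1009, 0.1411, 0.0320],  # sample from the comment in the training loop
    [2.0, 0.5, -1.0],           # hypothetical
    [0.1, 0.2, 0.9],            # hypothetical
]
batch_y = [1, 0, 1]             # hypothetical integer labels, not one-hot

preds = [argmax(row) for row in batch_out]
correct = sum(int(p == t) for p, t in zip(preds, batch_y))
accuracy = correct / len(batch_y)
probs = softmax(batch_out[0])

print(preds, correct, accuracy)
```

Because softmax preserves the ordering of the scores, taking the argmax of the raw outputs gives the same prediction as taking the argmax of the probabilities, so the loops never need to apply softmax explicitly.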

That completes training and testing the network. The full code is as follows:

import torch
import torchvision
from torchvision import transforms
from torch.utils.data import DataLoader

# prepare the data set
# training data
train_data = torchvision.datasets.ImageFolder(
    "F:/eyeDataSet/trainData",
    transform=transforms.Compose([
        transforms.Resize(256),      # Resize replaces the deprecated transforms.Scale
        transforms.CenterCrop(224),
        transforms.ToTensor(),
    ]))
print(len(train_data))
train_loader = DataLoader(train_data, batch_size=20, shuffle=True)

# test data
test_data = torchvision.datasets.ImageFolder(
    "F:/eyeDataSet/testData",
    transform=transforms.Compose([
        transforms.Resize(256),
        transforms.CenterCrop(224),
        transforms.ToTensor(),
    ]))
test_loader = DataLoader(test_data, batch_size=20, shuffle=False)  # order does not matter for evaluation

# prepare the model
# (newer torchvision releases use the weights= argument instead of pretrained=True)
mode1_ft_res18 = torchvision.models.resnet18(pretrained=True)
for param in mode1_ft_res18.parameters():
    param.requires_grad = False
num_fc = mode1_ft_res18.fc.in_features
mode1_ft_res18.fc = torch.nn.Linear(num_fc, 3)

# loss function and optimizer
criterion = torch.nn.CrossEntropyLoss()
# only the last fc layer is trained
optimizer = torch.optim.Adam(mode1_ft_res18.fc.parameters(), lr=0.001)

# start training
# labels are class indices, not one-hot vectors
EPOCH = 1
for epoch in range(EPOCH):
    train_loss = 0.
    train_acc = 0.
    for step, data in enumerate(train_loader):
        # tensors are used directly; no Variable wrapper is needed since PyTorch 0.4
        batch_x, batch_y = data
        # out holds the raw class scores (logits), shape batch_size * num_classes,
        # e.g. [-1.1009 0.1411 0.0320] for one sample; the prediction is the index of the maximum
        out = mode1_ft_res18(batch_x)
        loss = criterion(out, batch_y)
        train_loss += loss.item()  # .item() extracts the Python number from a 0-dim tensor
        # pred is the predicted class, batch_y is the true label
        pred = torch.max(out, 1)[1]
        train_correct = (pred == batch_y).sum()
        train_acc += train_correct.item()
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()
        if step % 14 == 0:
            # criterion already averages over the batch, so the loss is averaged over steps
            print("Epoch: ", epoch, "Step", step,
                  "Train_loss: ", train_loss / (step + 1), "Train acc: ", train_acc / ((step + 1) * 20))
    # print("Epoch: ", epoch, "Train_loss: ", train_loss / len(train_loader), "Train acc: ", train_acc / len(train_data))

# test the model
mode1_ft_res18.eval()
eval_loss = 0.
eval_acc = 0.
with torch.no_grad():  # evaluation only: no backward pass and no optimizer step on the test data
    for step, data in enumerate(test_loader):
        batch_x, batch_y = data
        out = mode1_ft_res18(batch_x)
        loss = criterion(out, batch_y)
        # multiply the batch mean by the batch size to accumulate a per-sample sum
        eval_loss += loss.item() * batch_x.size(0)
        # pred is the predicted class, batch_y is the true label
        pred = torch.max(out, 1)[1]
        test_correct = (pred == batch_y).sum()
        eval_acc += test_correct.item()
print("Test_loss: ", eval_loss / len(test_data), "Test acc: ", eval_acc / len(test_data))

II. Fine-tuning with a pretrained model in PyTorch

In deep learning, many sub-fields, such as animal or food recognition, have public data sets that are far smaller than ImageNet and similar collections. Training a deep network from scratch on such data overfits easily. The usual remedy is to take a model that has already been trained on a large data set and fine-tune it directly on the target data set. For the target data set, the pretrained model is essentially just a relatively good parameter initialization. When the large data set and the target data set are similar in structure, fine-tuning on the target data set can give good results.

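Steps for fine-tuning a pretrained network:

1. First change the output size of the pretrained model's fully connected classification layer. The number of classes in the target data set usually differs from that of the large data set, so set it to the number of classes in the target training set; if the two already match, no change is needed.

2. Freeze the parameters of all layers before the classifier so that they are not updated, and train only the classification layer. The learning rate can be larger at this stage, for example 10 times (or a few times) the original initial learning rate, or a value such as 0.01. Training is fast here because no gradients have to be computed for the frozen layers. It is worth trying several learning rates.

3. Then set a relatively small learning rate and train the whole network. Training is slower at this stage.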
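The three steps above can be sketched on a toy model. This is only an illustration under stated assumptions: the small `backbone` stands in for a pretrained network, the data are random tensors, and the learning rates 0.01 and 0.001 and the `run_step` helper are arbitrary choices rather than values from the article.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical stand-in for a pretrained network plus a new classification layer.
backbone = nn.Sequential(nn.Linear(8, 16), nn.ReLU())
classifier = nn.Linear(16, 3)            # step 1: head sized to the 3 target classes
model = nn.Sequential(backbone, classifier)

x = torch.randn(32, 8)                   # toy inputs
y = torch.randint(0, 3, (32,))           # toy integer labels
criterion = nn.CrossEntropyLoss()

def run_step(optimizer):
    """One full-batch optimization step; returns the loss value."""
    optimizer.zero_grad()
    loss = criterion(model(x), y)
    loss.backward()
    optimizer.step()
    return loss.item()

# Step 2: freeze the backbone and train only the classifier with a larger learning rate.
for p in backbone.parameters():
    p.requires_grad = False
head_optimizer = torch.optim.SGD(classifier.parameters(), lr=0.01, momentum=0.9)
weights_before = backbone[0].weight.detach().clone()
for _ in range(5):
    run_step(head_optimizer)
backbone_unchanged = torch.equal(weights_before, backbone[0].weight.detach())

# Step 3: unfreeze everything and train the whole network with a smaller learning rate.
for p in backbone.parameters():
    p.requires_grad = True
full_optimizer = torch.optim.SGD(model.parameters(), lr=0.001, momentum=0.9)
for _ in range(5):
    run_step(full_optimizer)
backbone_changed = not torch.equal(weights_before, backbone[0].weight.detach())

print(backbone_unchanged, backbone_changed)
```

Creating a fresh optimizer for the second stage is one simple way to switch both the parameter set and the learning rate; reusing one optimizer and editing its parameter groups would be an alternative design.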

The following explains in detail how to freeze learnable parameters and how to set different learning rates for different layers when fine-tuning a pretrained network in PyTorch.

1. Freezing the learnable parameters of selected layers in PyTorch:

import torch.nn as nn

class Net(nn.Module):
    def __init__(self, num_classes=546):
        super(Net, self).__init__()
        self.features = nn.Sequential(
            nn.Conv2d(1, 64, kernel_size=3, stride=2, padding=1),
            nn.BatchNorm2d(64),
            nn.ReLU(inplace=True),
            nn.Conv2d(64, 64, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(64),
            nn.ReLU(inplace=True),
        )
        self.Conv1_1 = nn.Sequential(
            nn.Conv2d(64, 64, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(64),
            nn.ReLU(inplace=True),
            nn.Conv2d(64, 64, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(64),
        )
        # freeze every parameter registered so far (features and Conv1_1)
        for p in self.parameters():
            p.requires_grad = False
        # layers defined after this point stay trainable
        self.Conv1_2 = nn.Sequential(
            nn.Conv2d(64, 64, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(64),
            nn.ReLU(inplace=True),
            nn.Conv2d(64, 64, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(64),
        )
    # forward() is not shown in this excerpt

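In the code above, the parameters of self.features and self.Conv1_1 in Net are frozen and cannot be learned. What matters is where the following code is inserted:

for p in self.parameters():
    p.requires_grad = False

The parameters of all layers defined before this point are not learnable, so no gradients are computed for them. You can also freeze one specific layer, as follows:

for p in self.features.parameters():
    p.requires_grad = False

Then all parameters of self.features are not learnable.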

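Note: for this setting to take effect during training, the optimizer should be given only the parameters that are still trainable (older PyTorch releases reject parameters that do not require gradients):

optimizer = torch.optim.SGD(filter(lambda p: p.requires_grad, model.parameters()), args.lr,
                            momentum=args.momentum,
                            weight_decay=args.weight_decay)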
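As a small check of the freezing and filtering idea, the sketch below freezes the first layer of a toy model and passes only the remaining parameters to SGD. The model, its layer sizes and the hyperparameter values are assumptions made for illustration; they stand in for Net and the args object used in the article.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

model = nn.Sequential(
    nn.Linear(4, 8),   # plays the role of the frozen "features" block
    nn.ReLU(),
    nn.Linear(8, 2),   # stays trainable
)

# Freeze the first layer only.
for p in model[0].parameters():
    p.requires_grad = False

# Hand the optimizer only the parameters that still require gradients.
trainable = [p for p in model.parameters() if p.requires_grad]
optimizer = torch.optim.SGD(trainable, lr=0.1, momentum=0.9, weight_decay=1e-4)
num_in_optimizer = sum(len(group["params"]) for group in optimizer.param_groups)

# One backward pass: frozen parameters receive no gradient at all.
loss = model(torch.randn(5, 4)).sum()
loss.backward()
frozen_has_grad = model[0].weight.grad is not None
trainable_has_grad = model[2].weight.grad is not None

print(num_in_optimizer, frozen_has_grad, trainable_has_grad)
```

Only the weight and bias of the last layer end up in the optimizer, and the frozen layer's grad attribute stays None after the backward pass.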

2. Setting different learning rates for different layers in PyTorch

model = Net()
# ids of the parameters that get their own learning rate
conv1_2_params = list(map(id, model.Conv1_2.parameters()))
# every other parameter
base_params = filter(lambda p: id(p) not in conv1_2_params,
                     model.parameters())
optimizer = torch.optim.SGD([
    {"params": base_params},
    {"params": model.Conv1_2.parameters(), "lr": 10 * args.lr}], args.lr,
    momentum=args.momentum, weight_decay=args.weight_decay)

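The code above sets the learning rate of self.Conv1_2 in Net to 10 times the learning rate that is passed in. base_params has no explicit learning rate, so it uses the default that is passed in, args.lr.

Note that

[{"params": base_params}, {"params": model.Conv1_2.parameters(), "lr": 10 * args.lr}]

is a list of dictionaries, one dictionary per parameter group.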
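The effect of such a list of dictionaries can be inspected through optimizer.param_groups. The following sketch is an illustration only: the two-layer `nn.ModuleDict` stands in for Net, and `base_lr` stands in for args.lr.

```python
import torch
import torch.nn as nn

base_lr = 0.01  # stands in for args.lr

# Toy model with a "features" part and a "Conv1_2" part (both hypothetical Linear layers).
model = nn.ModuleDict({
    "features": nn.Linear(4, 4),
    "Conv1_2": nn.Linear(4, 2),
})

special_ids = {id(p) for p in model["Conv1_2"].parameters()}
base_params = [p for p in model.parameters() if id(p) not in special_ids]

optimizer = torch.optim.SGD(
    [
        {"params": base_params},                                        # uses the default lr
        {"params": model["Conv1_2"].parameters(), "lr": 10 * base_lr},  # overrides lr
    ],
    lr=base_lr,
    momentum=0.9,
)

group_lrs = [group["lr"] for group in optimizer.param_groups]
group_momenta = [group["momentum"] for group in optimizer.param_groups]
group_sizes = [len(group["params"]) for group in optimizer.param_groups]
print(group_lrs, group_momenta, group_sizes)
```

Any option that a group does not set itself, here momentum for both groups and lr for the first, is filled in from the keyword arguments given to the optimizer.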

Setting the learning rates this way is not very flexible. For more flexible per-layer learning rates, set them inside an adjust_learning_rate function, as follows:

def adjust_learning_rate(optimizer, epoch, args):
    # piecewise-constant schedule: 10 epochs each at 0.01, 0.005 and 0.0025
    lre = []
    lre.extend([0.01] * 10)
    lre.extend([0.005] * 10)
    lre.extend([0.0025] * 10)
    lr = lre[epoch]
    optimizer.param_groups[0]["lr"] = 0.9 * lr
    optimizer.param_groups[1]["lr"] = 10 * lr
    print(optimizer.param_groups[0]["lr"])
    print(optimizer.param_groups[1]["lr"])

In the code above, optimizer.param_groups[0] corresponds to {"params": base_params} in the list [{"params": base_params}, {"params": model.Conv1_2.parameters(), "lr": 10 * args.lr}], and optimizer.param_groups[1] corresponds to {"params": model.Conv1_2.parameters(), "lr": 10 * args.lr}. The learning rates set here override args.lr. In my opinion this approach is more flexible. It can also be written as follows (the learning rates here are arbitrary and do not match the code above):

import numpy as np

def adjust_learning_rate(optimizer, epoch, args):
    # 40 log-spaced values from 1e-2 down to 1e-4, one per epoch
    lre = np.logspace(-2, -4, 40)
    lr = lre[epoch]
    for i in range(len(optimizer.param_groups)):
        param_group = optimizer.param_groups[i]
        if i == 0:
            param_group["lr"] = 0.9 * lr
        else:
            param_group["lr"] = 10 * lr
        print(param_group["lr"])
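To see what such a function does to the parameter groups, the piecewise schedule can be run against a stand-in object. This is a sketch under assumptions: `fake_optimizer` is a `SimpleNamespace` that only mimics the param_groups attribute of a real optimizer, and the function here returns the learning rates instead of printing them.

```python
from types import SimpleNamespace

def piecewise_lr(epoch):
    """10 epochs each at 0.01, 0.005 and 0.0025 (30 epochs in total)."""
    schedule = [0.01] * 10 + [0.005] * 10 + [0.0025] * 10
    return schedule[epoch]

def adjust_learning_rate(optimizer, epoch):
    lr = piecewise_lr(epoch)
    optimizer.param_groups[0]["lr"] = 0.9 * lr   # base layers
    optimizer.param_groups[1]["lr"] = 10 * lr    # layer with the boosted rate
    return [group["lr"] for group in optimizer.param_groups]

# Stand-in for a real optimizer with two parameter groups (initial values are arbitrary).
fake_optimizer = SimpleNamespace(param_groups=[{"lr": 0.1}, {"lr": 1.0}])

lrs_epoch0 = adjust_learning_rate(fake_optimizer, 0)
lrs_epoch15 = adjust_learning_rate(fake_optimizer, 15)
lrs_epoch29 = adjust_learning_rate(fake_optimizer, 29)
print(lrs_epoch0, lrs_epoch15, lrs_epoch29)
```

Because the schedule is looked up by index, calling the function with an epoch beyond the length of the list (30 here, 40 in the logspace variant) raises an IndexError, so the schedule should cover the full number of training epochs.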

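Below is the PyTorch implementation of the SGD optimizer as it appeared in the PyTorch source at the time of writing, together with the meaning of each of its parameters: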

import torch
from .optimizer import Optimizer, required


class SGD(Optimizer):
    r"""Implements stochastic gradient descent (optionally with momentum).

    Nesterov momentum is based on the formula from
    `On the importance of initialization and momentum in deep learning`__.

    Args:
        params (iterable): iterable of parameters to optimize or dicts defining
            parameter groups
        lr (float): learning rate
        momentum (float, optional): momentum factor (default: 0)
        weight_decay (float, optional): weight decay (L2 penalty) (default: 0)
        dampening (float, optional): dampening for momentum (default: 0)
        nesterov (bool, optional): enables Nesterov momentum (default: False)

    Example:
        >>> optimizer = torch.optim.SGD(model.parameters(), lr=0.1, momentum=0.9)
        >>> optimizer.zero_grad()
        >>> loss_fn(model(input), target).backward()
        >>> optimizer.step()

    __ http://www.cs.toronto.edu/%7Ehinton/absps/momentum.pdf

    .. note::
        The implementation of SGD with Momentum/Nesterov subtly differs from
        Sutskever et. al. and implementations in some other frameworks.

        Considering the specific case of Momentum, the update can be written as

        .. math::
                  v = \rho * v + g \\
                  p = p - lr * v

        where p, g, v and :math:`\rho` denote the parameters, gradient,
        velocity, and momentum respectively.

        This is in contrast to Sutskever et. al. and
        other frameworks which employ an update of the form

        .. math::
             v = \rho * v + lr * g \\
             p = p - v

        The Nesterov version is analogously modified.
    """

    def __init__(self, params, lr=required, momentum=0, dampening=0,
                 weight_decay=0, nesterov=False):
        if lr is not required and lr < 0.0:
            raise ValueError("Invalid learning rate: {}".format(lr))
        if momentum < 0.0:
            raise ValueError("Invalid momentum value: {}".format(momentum))
        if weight_decay < 0.0:
            raise ValueError("Invalid weight_decay value: {}".format(weight_decay))

        defaults = dict(lr=lr, momentum=momentum, dampening=dampening,
                        weight_decay=weight_decay, nesterov=nesterov)
        if nesterov and (momentum <= 0 or dampening != 0):
            raise ValueError("Nesterov momentum requires a momentum and zero dampening")
        super(SGD, self).__init__(params, defaults)

    def __setstate__(self, state):
        super(SGD, self).__setstate__(state)
        for group in self.param_groups:
            group.setdefault("nesterov", False)

    def step(self, closure=None):
        """Performs a single optimization step.

        Arguments:
            closure (callable, optional): A closure that reevaluates the model
                and returns the loss.
        """
        loss = None
        if closure is not None:
            loss = closure()

        for group in self.param_groups:
            weight_decay = group["weight_decay"]
            momentum = group["momentum"]
            dampening = group["dampening"]
            nesterov = group["nesterov"]

            for p in group["params"]:
                if p.grad is None:
                    continue
                d_p = p.grad.data
                if weight_decay != 0:
                    d_p.add_(weight_decay, p.data)
                if momentum != 0:
                    param_state = self.state[p]
                    if "momentum_buffer" not in param_state:
                        buf = param_state["momentum_buffer"] = torch.zeros_like(p.data)
                        buf.mul_(momentum).add_(d_p)
                    else:
                        buf = param_state["momentum_buffer"]
                        buf.mul_(momentum).add_(1 - dampening, d_p)
                    if nesterov:
                        d_p = d_p.add(momentum, buf)
                    else:
                        d_p = buf

                p.data.add_(-group["lr"], d_p)

        return loss
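The note in the docstring contrasts two ways of writing the momentum update. A plain-Python sketch on a one-dimensional toy problem makes the relationship visible; the objective f(p) = p**2, the starting point and the hyperparameters are arbitrary assumptions chosen for illustration.

```python
def grad(p):
    """Gradient of the toy objective f(p) = p**2."""
    return 2.0 * p

def pytorch_style(p, lr, rho, steps):
    """v = rho * v + g;  p = p - lr * v  (the form used in the docstring above)."""
    v = 0.0
    for _ in range(steps):
        v = rho * v + grad(p)
        p = p - lr * v
    return p

def sutskever_style(p, lr, rho, steps):
    """v = rho * v + lr * g;  p = p - v  (the form used by Sutskever et al.)."""
    v = 0.0
    for _ in range(steps):
        v = rho * v + lr * grad(p)
        p = p - v
    return p

p_torch = pytorch_style(1.0, lr=0.1, rho=0.9, steps=20)
p_sutskever = sutskever_style(1.0, lr=0.1, rho=0.9, steps=20)
print(p_torch, p_sutskever)
```

With a constant learning rate the two forms trace the same parameter trajectory, since the velocity of the second form is just lr times the velocity of the first. They start to differ once the learning rate changes during training, as it does with adjust_learning_rate: in the first form the new learning rate rescales the entire accumulated velocity immediately.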

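Lessons learned:

When fine-tuning, it is best not to alternate between learnable and frozen layers; the results are generally poor. The usual recipe is enough: first fine-tune the classification layer with a larger learning rate, then set a smaller learning rate for the whole network and train all layers together.

Alternatively, you can skip the separate classification-layer stage and train all layers together from the start, giving the classification layer a relatively larger learning rate. This also works; I have not evaluated which of the two performs better. When using triplet loss to fine-tune a network that was trained with softmax loss, a small step-wise learning rate with all layers trained together works well, and there is no need to first fine-tune the output of the layer before the classification layer.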

The above is my personal experience; I hope it serves as a useful reference.

Original link: https://blog.csdn.net/xiexu911/article/details/81227126