When using `torch.max` and `F.softmax`, I always get a bit confused about which dimension to pass, so here is a summary.

First, an example with a 2-D tensor:

```python
import torch
import torch.nn.functional as F

input = torch.randn(3, 4)
print(input)
# tensor([[-0.5526, -0.0194,  2.1469, -0.2567],
#         [-0.3337, -0.9229,  0.0376, -0.0801],
#         [ 1.4721,  0.1181, -2.6214,  1.7721]])

b = F.softmax(input, dim=0)  # softmax down each column; every column sums to 1
print(b)
# tensor([[0.1018, 0.3918, 0.8851, 0.1021],
#         [0.1268, 0.1587, 0.1074, 0.1218],
#         [0.7714, 0.4495, 0.0075, 0.7762]])

c = F.softmax(input, dim=1)  # softmax across each row; every row sums to 1
print(c)
# tensor([[0.0529, 0.0901, 0.7860, 0.0710],
#         [0.2329, 0.1292, 0.3377, 0.3002],
#         [0.3810, 0.0984, 0.0064, 0.5143]])

d = torch.max(input, dim=0)  # maximum of each column
print(d)
# torch.return_types.max(
# values=tensor([1.4721, 0.1181, 2.1469, 1.7721]),
# indices=tensor([2, 2, 0, 2]))

e = torch.max(input, dim=1)  # maximum of each row
print(e)
# torch.return_types.max(
# values=tensor([2.1469, 0.0376, 1.7721]),
# indices=tensor([2, 2, 3]))
```
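One way to remember the rule is that `dim` names the axis that gets normalized (for softmax) or collapsed (for max). The sketch below checks this on a small made-up tensor `x`; the values are illustrative and not taken from the example above.

```python
import torch
import torch.nn.functional as F

x = torch.tensor([[1.0, 2.0, 3.0],
                  [4.0, 0.0, -1.0]])  # shape (2, 3), illustrative values

# softmax normalizes along `dim`, so summing over that same dim gives ones.
col_sums = F.softmax(x, dim=0).sum(dim=0)  # one entry per column, shape (3,)
row_sums = F.softmax(x, dim=1).sum(dim=1)  # one entry per row, shape (2,)

# torch.max removes `dim` and returns a (values, indices) pair.
col_max = torch.max(x, dim=0)  # values have shape (3,)
row_max = torch.max(x, dim=1)  # values have shape (2,)

print(col_sums, row_sums)
print(col_max.values, col_max.indices)  # tensor([4., 2., 3.]) tensor([1, 0, 0])
print(row_max.values, row_max.indices)  # tensor([3., 4.]) tensor([2, 0])
```

The `indices` field is the position of each maximum along the collapsed axis, which is what `torch.argmax` with the same `dim` returns.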

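Next, an example with a 3-D tensor.

`softmax` turns the given tensor into probability distributions along the dimension specified by `dim`.

`b` holds the distributions along dim=0, so for example b[0][5][6] + b[1][5][6] + b[2][5][6] = 1.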

```python
a = torch.rand(3, 16, 20)
b = F.softmax(a, dim=0)
c = F.softmax(a, dim=1)
d = F.softmax(a, dim=2)
```

```python
In [1]: import torch as t
In [2]: import torch.nn.functional as F
In [4]: a=t.Tensor(3,4,5)
In [5]: b=F.softmax(a,dim=0)
In [6]: c=F.softmax(a,dim=1)
In [7]: d=F.softmax(a,dim=2)

In [8]: a
Out[8]:
tensor([[[-0.1581,  0.0000,  0.0000,  0.0000, -0.0344],
         [ 0.0000, -0.0344,  0.0000, -0.0344,  0.0000],
         [-0.0344,  0.0000, -0.0344,  0.0000, -0.0344],
         [ 0.0000, -0.0344,  0.0000, -0.0344,  0.0000]],

        [[-0.0344,  0.0000, -0.0344,  0.0000, -0.0344],
         [ 0.0000, -0.0344,  0.0000, -0.0344,  0.0000],
         [-0.0344,  0.0000, -0.0344,  0.0000, -0.0344],
         [ 0.0000, -0.0344,  0.0000, -0.0344,  0.0000]],

        [[-0.0344,  0.0000, -0.0344,  0.0000, -0.0344],
         [ 0.0000, -0.0344,  0.0000, -0.0344,  0.0000],
         [-0.0344,  0.0000, -0.0344,  0.0000, -0.0344],
         [ 0.0000, -0.0344,  0.0000, -0.0344,  0.0000]]])

In [9]: b
Out[9]:
tensor([[[0.3064, 0.3333, 0.3410, 0.3333, 0.3333],
         [0.3333, 0.3333, 0.3333, 0.3333, 0.3333],
         [0.3333, 0.3333, 0.3333, 0.3333, 0.3333],
         [0.3333, 0.3333, 0.3333, 0.3333, 0.3333]],

        [[0.3468, 0.3333, 0.3295, 0.3333, 0.3333],
         [0.3333, 0.3333, 0.3333, 0.3333, 0.3333],
         [0.3333, 0.3333, 0.3333, 0.3333, 0.3333],
         [0.3333, 0.3333, 0.3333, 0.3333, 0.3333]],

        [[0.3468, 0.3333, 0.3295, 0.3333, 0.3333],
         [0.3333, 0.3333, 0.3333, 0.3333, 0.3333],
         [0.3333, 0.3333, 0.3333, 0.3333, 0.3333],
         [0.3333, 0.3333, 0.3333, 0.3333, 0.3333]]])

In [10]: b.sum()
Out[10]: tensor(20.0000)

In [11]: b[0][0][0]+b[1][0][0]+b[2][0][0]
Out[11]: tensor(1.0000)

In [12]: c.sum()
Out[12]: tensor(15.)

In [13]: c
Out[13]:
tensor([[[0.2235, 0.2543, 0.2521, 0.2543, 0.2457],
         [0.2618, 0.2457, 0.2521, 0.2457, 0.2543],
         [0.2529, 0.2543, 0.2436, 0.2543, 0.2457],
         [0.2618, 0.2457, 0.2521, 0.2457, 0.2543]],

        [[0.2457, 0.2543, 0.2457, 0.2543, 0.2457],
         [0.2543, 0.2457, 0.2543, 0.2457, 0.2543],
         [0.2457, 0.2543, 0.2457, 0.2543, 0.2457],
         [0.2543, 0.2457, 0.2543, 0.2457, 0.2543]],

        [[0.2457, 0.2543, 0.2457, 0.2543, 0.2457],
         [0.2543, 0.2457, 0.2543, 0.2457, 0.2543],
         [0.2457, 0.2543, 0.2457, 0.2543, 0.2457],
         [0.2543, 0.2457, 0.2543, 0.2457, 0.2543]]])

In [14]: n=t.rand(3,4)

In [15]: n
Out[15]:
tensor([[0.2769, 0.3475, 0.8914, 0.6845],
        [0.9251, 0.3976, 0.8690, 0.4510],
        [0.8249, 0.1157, 0.3075, 0.3799]])

In [16]: m=t.argmax(n,dim=0)

In [17]: m
Out[17]: tensor([1, 1, 0, 0])

In [18]: p=t.argmax(n,dim=1)

In [19]: p
Out[19]: tensor([2, 0, 0])

In [20]: d.sum()
Out[20]: tensor(12.0000)

In [22]: d
Out[22]:
tensor([[[0.1771, 0.2075, 0.2075, 0.2075, 0.2005],
         [0.2027, 0.1959, 0.2027, 0.1959, 0.2027],
         [0.1972, 0.2041, 0.1972, 0.2041, 0.1972],
         [0.2027, 0.1959, 0.2027, 0.1959, 0.2027]],

        [[0.1972, 0.2041, 0.1972, 0.2041, 0.1972],
         [0.2027, 0.1959, 0.2027, 0.1959, 0.2027],
         [0.1972, 0.2041, 0.1972, 0.2041, 0.1972],
         [0.2027, 0.1959, 0.2027, 0.1959, 0.2027]],

        [[0.1972, 0.2041, 0.1972, 0.2041, 0.1972],
         [0.2027, 0.1959, 0.2027, 0.1959, 0.2027],
         [0.1972, 0.2041, 0.1972, 0.2041, 0.1972],
         [0.2027, 0.1959, 0.2027, 0.1959, 0.2027]]])

In [23]: d[0][0].sum()
Out[23]: tensor(1.)
```
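The totals 20, 15 and 12 in the session follow directly from the shape (3, 4, 5): every slice along the softmax dimension sums to 1, so the grand total is the number of remaining positions. The sketch below checks this for each dimension. It uses `torch.rand` rather than `t.Tensor(3, 4, 5)`, since the latter returns uninitialized memory and its values are arbitrary.

```python
import torch
import torch.nn.functional as F

a = torch.rand(3, 4, 5)

totals = {}
for dim in range(a.dim()):
    p = F.softmax(a, dim=dim)
    sums = p.sum(dim=dim)
    # Summing over the softmax dimension must give a tensor of ones...
    assert torch.allclose(sums, torch.ones_like(sums))
    # ...so the grand total equals the number of remaining positions.
    totals[dim] = round(p.sum().item())

print(totals)  # {0: 20, 1: 15, 2: 12}
```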

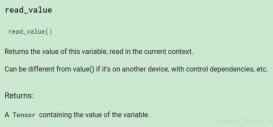

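Additional notes: using torch.nn.Softmax for multi-class problems

Why bring this up? At work I ran into a semantic segmentation model whose prediction has 16 output feature maps, in other words a 16-class problem.

The value of a pixel in each channel indicates how strongly that pixel belongs to the channel's class. To show the classes in different colors on a single image, I had to learn how to use torch.nn.Softmax.

Start with a simple example. Suppose the output has shape (3, 4, 4), that is, three 4x4 feature maps.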

```python
import torch

img = torch.rand((3, 4, 4))
print(img)
```

Output:

```
tensor([[[0.0413, 0.8728, 0.8926, 0.0693],
         [0.4072, 0.0302, 0.9248, 0.6676],
         [0.4699, 0.9197, 0.3333, 0.4809],
         [0.3877, 0.7673, 0.6132, 0.5203]],

        [[0.4940, 0.7996, 0.5513, 0.8016],
         [0.1157, 0.8323, 0.9944, 0.2127],
         [0.3055, 0.4343, 0.8123, 0.3184],
         [0.8246, 0.6731, 0.3229, 0.1730]],

        [[0.0661, 0.1905, 0.4490, 0.7484],
         [0.4013, 0.1468, 0.2145, 0.8838],
         [0.0083, 0.5029, 0.0141, 0.8998],
         [0.8673, 0.2308, 0.8808, 0.0532]]])
```

There are three feature maps. The larger the value at a given position in a feature map, the more likely that pixel belongs to the class the feature map represents.

```python
import torch.nn as nn

softmax = nn.Softmax(dim=0)
img = softmax(img)
print(img)
```

Output:

```
tensor([[[0.2780, 0.4107, 0.4251, 0.1979],
         [0.3648, 0.2297, 0.3901, 0.3477],
         [0.4035, 0.4396, 0.2993, 0.2967],
         [0.2402, 0.4008, 0.3273, 0.4285]],

        [[0.4371, 0.3817, 0.3022, 0.4117],
         [0.2726, 0.5122, 0.4182, 0.2206],
         [0.3423, 0.2706, 0.4832, 0.2522],
         [0.3718, 0.3648, 0.2449, 0.3028]],

        [[0.2849, 0.2076, 0.2728, 0.3904],
         [0.3627, 0.2581, 0.1917, 0.4317],
         [0.2543, 0.2898, 0.2175, 0.4511],
         [0.3880, 0.2344, 0.4278, 0.2686]]])
```

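As you can see, the code above applies softmax to the pixel values at the same position across the feature maps. The positions marked in red in the figure sum to 1, and likewise the positions marked in blue. For example, the three top-left values give 0.2780 + 0.4371 + 0.2849 = 1.

Softmax works on each pixel's values along the chosen dimension (here dim=0, the first dimension) and maps them into the range 0 to 1. The ordering of the values along that dimension is preserved, and so is the shape of the tensor.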
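To make the computation concrete, here is a minimal sketch that reproduces one pixel by hand. It takes the three values at position (0, 0) from the tensor printed before softmax, exponentiates them, divides by their sum, and compares the result with `nn.Softmax`. The variable names are mine, not from the original code.

```python
import math
import torch
import torch.nn as nn

# Values at pixel (0, 0) of the three feature maps, before softmax.
pixel = torch.tensor([0.0413, 0.4940, 0.0661])

# Softmax by hand: exponentiate, then divide by the sum of the exponentials.
exps = [math.exp(v) for v in pixel.tolist()]
manual = [e / sum(exps) for e in exps]

builtin = nn.Softmax(dim=0)(pixel)

print([round(m, 4) for m in manual])  # [0.278, 0.4371, 0.2849]
print(builtin)
```

These are the same three numbers that appear at position (0, 0) of the softmax output.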

```python
print(torch.max(img, 0))
```

Output:

```
torch.return_types.max(
values=tensor([[0.4371, 0.4107, 0.4251, 0.4117],
        [0.3648, 0.5122, 0.4182, 0.4317],
        [0.4035, 0.4396, 0.4832, 0.4511],
        [0.3880, 0.4008, 0.4278, 0.4285]]),
indices=tensor([[1, 0, 0, 1],
        [0, 1, 1, 2],
        [0, 0, 1, 2],
        [2, 0, 2, 0]]))
```

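Here the 3x4x4 tensor has been reduced along dim=0 to a single 4x4 map (passing `keepdim=True` would give 1x4x4 instead). Each value is the maximum over the channels for that pixel, and `indices` gives the corresponding class.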
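The shape behavior can be checked directly. The sketch below uses a random stand-in tensor named `logits` (my name, with an arbitrary seed) to compare the default reduction with `keepdim=True`. It also shows that taking the argmax before softmax picks the same class per pixel, because softmax preserves the ordering along `dim`.

```python
import torch

torch.manual_seed(0)          # arbitrary seed, only for repeatability
logits = torch.rand(3, 4, 4)  # stand-in for a 3-class prediction

probs = torch.softmax(logits, dim=0)

reduced = torch.max(probs, dim=0)             # dim 0 is dropped
kept = torch.max(probs, dim=0, keepdim=True)  # dim 0 is kept with size 1
print(reduced.values.shape, kept.values.shape)  # torch.Size([4, 4]) torch.Size([1, 4, 4])

# Compare the per-pixel winning class computed before and after softmax.
same = torch.equal(torch.argmax(logits, dim=0), reduced.indices)
print(same)
```

So if only the class mask is needed, the softmax step can be skipped. It matters when the probabilities themselves are used.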

With the flow above understood, the real case is easy to handle.

In the concrete case, `output` has size 16x416x416.

```python
import cv2
import numpy as np
import torch
import torch.nn as nn

# `output` is the network prediction with shape (16, 416, 416).
output = torch.tensor(output)

sm = nn.Softmax(dim=0)
output = sm(output)

# Class index with the highest probability at every pixel, shape (416, 416).
mask = torch.max(output, 0).indices.numpy()

# One color triple per class index 0..15, in the order the values are
# written to the last axis of the image.
palette = np.array([
    [255, 255, 255],  # class 0
    [255, 180, 0],    # class 1
    [255, 180, 180],  # class 2
    [255, 180, 255],  # class 3
    [255, 255, 180],  # class 4
    [255, 255, 0],    # class 5
    [255, 0, 180],    # class 6
    [255, 0, 255],    # class 7
    [255, 0, 0],      # class 8
    [180, 0, 0],      # class 9
    [180, 255, 255],  # class 10
    [180, 0, 180],    # class 11
    [180, 0, 255],    # class 12
    [180, 255, 180],  # class 13
    [0, 180, 255],    # class 14
    [0, 0, 0],        # class 15
], dtype=np.uint8)

# The result is a color image, so it gets an extra axis of size 3.
# Indexing the palette with the mask replaces the per-pixel if-chain.
rgb_img = palette[mask]  # shape (416, 416, 3)

cv2.imwrite('output.jpg', rgb_img)
```
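One detail to keep in mind: `cv2.imwrite` treats the last axis as blue, green, red. If the color triples in the code above are meant as RGB, the channel axis should be reversed before saving. The sketch below shows the lookup and the reversal on a tiny hypothetical two-class palette, using NumPy only.

```python
import numpy as np

palette_rgb = np.array([[255, 180, 0],    # hypothetical class 0 color (R, G, B)
                        [0, 180, 255]],   # hypothetical class 1 color (R, G, B)
                       dtype=np.uint8)
mask = np.array([[0, 1],
                 [1, 0]])

rgb_img = palette_rgb[mask]   # integer-array indexing gives shape (2, 2, 3)
bgr_img = rgb_img[..., ::-1]  # reverse the channel order for OpenCV
print(rgb_img.shape, bgr_img[0, 0].tolist())  # (2, 2, 3) [0, 180, 255]
```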

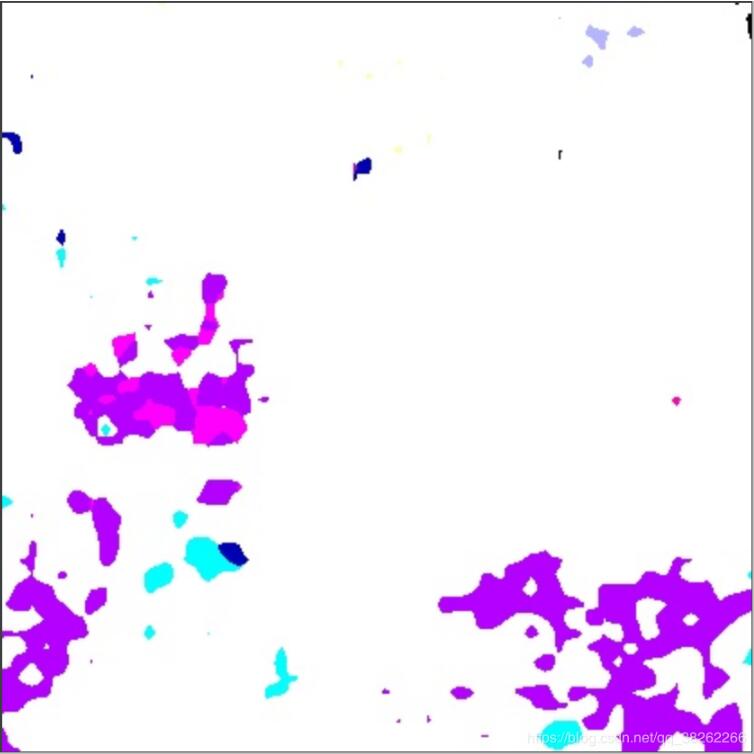

最后保存得到的圖為:


Original link: https://blog.csdn.net/Jasminexjf/article/details/90402990