Understanding shuffle=True:

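I used to be unsure what shuffle actually does. Suppose the data is a, b, c, d and batch_size=2. When the data is shuffled, which of the following happens?

1. Batches are taken in order first and then shuffled internally, i.e. take a, b first and shuffle a, b;

2. The whole dataset is shuffled first, and then batches are taken.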
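To make the difference between the two hypotheses concrete, here is a plain-Python sketch of each. The function names are hypothetical and this is not PyTorch code. Under hypothesis 1 the batch *membership* never changes ({a, b} and {c, d}); under hypothesis 2 items from different halves can end up in the same batch.

```python
import random


def shuffle_within_batches(data, batch_size, rng):
    """Hypothesis 1: cut batches in order, then shuffle inside each batch."""
    batches = [data[i:i + batch_size] for i in range(0, len(data), batch_size)]
    for batch in batches:
        rng.shuffle(batch)  # slices are copies, so `data` is untouched
    return batches


def shuffle_then_batch(data, batch_size, rng):
    """Hypothesis 2: shuffle the whole dataset, then cut it into batches."""
    shuffled = list(data)
    rng.shuffle(shuffled)
    return [shuffled[i:i + batch_size] for i in range(0, len(shuffled), batch_size)]


data = ['a', 'b', 'c', 'd']
rng = random.Random(0)
print(shuffle_within_batches(data, 2, rng))  # always {a, b} and {c, d}, order inside may vary
print(shuffle_then_batch(data, 2, rng))      # e.g. a and c may share a batch
```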

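It turns out to be the second. In the DataLoader source, shuffle=True creates a RandomSampler, which yields a random permutation of the dataset indices; those shuffled indices are then grouped into batches of batch_size: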

```python
# From the DataLoader docstring:
#   shuffle (bool, optional): set to ``True`` to have the data reshuffled
#       at every epoch (default: ``False``).
# From DataLoader.__init__:
if shuffle:
    sampler = RandomSampler(dataset)  # at this point we only get indices
```
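The excerpt stops at the sampler. When batch_size is set, DataLoader wraps that sampler in a BatchSampler, which groups consecutive sampled indices into batches. The sketch below reproduces this two-step index pipeline directly with the real `RandomSampler` and `BatchSampler` classes. Using `range(4)` as a stand-in for a 4-item dataset is an illustrative assumption: indices 0..3 play the role of a, b, c, d.

```python
from torch.utils.data import BatchSampler, RandomSampler

# Stand-in for a 4-item dataset: indices 0..3 play the role of a, b, c, d.
indices = range(4)

# Step 1 (shuffle): RandomSampler yields a random permutation of the indices.
sampler = RandomSampler(indices)
# Step 2 (batch): BatchSampler groups consecutive sampled indices into batches.
batch_sampler = BatchSampler(sampler, batch_size=2, drop_last=False)

for epoch in range(2):
    # Each new pass draws a fresh permutation, then chunks it into pairs.
    print(f"epoch {epoch}:", list(batch_sampler))
```

Because the permutation is drawn over the whole index range before chunking, any two indices can land in the same batch, which is exactly hypothesis 2.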

Addendum: a quick test of how shuffle=True works in the PyTorch DataLoader.

Here is the code:

```python
import numpy as np
import torch
from torch.utils.data import Dataset, DataLoader


class DealDataset(Dataset):
    def __init__(self):
        # np.loadtxt with dtype=float32 needs a purely numeric file with no header:
        # feature columns first, the (numerically encoded) label in the last column.
        xy = np.loadtxt('./iris.csv', delimiter=',', dtype=np.float32)
        # Alternative with pandas (requires `import pandas as pd`):
        # data = pd.read_csv('iris.csv', header=None)
        # xy = data.values.astype(np.float32)
        self.x_data = torch.from_numpy(xy[:, 0:-1])  # features
        self.y_data = torch.from_numpy(xy[:, [-1]])  # labels, shape (N, 1)
        self.len = xy.shape[0]

    def __getitem__(self, index):
        return self.x_data[index], self.y_data[index]

    def __len__(self):
        return self.len


deal_dataset = DealDataset()
train_loader2 = DataLoader(dataset=deal_dataset, batch_size=2, shuffle=True)

# print(deal_dataset.x_data)
for i, data in enumerate(train_loader2):
    inputs, labels = data
    print(inputs)
    # print("batch", i, "inputs", inputs.size(), "labels", labels.size())
```
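The script above depends on a local, purely numeric iris.csv. As a self-contained alternative, here is a sketch that replaces the file with a hypothetical 6-row `TensorDataset` whose single feature is the row number. This makes it easy to read off which rows ended up in each batch and to see that every epoch draws a new order.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset

# Hypothetical stand-in for iris.csv: 6 samples whose single feature is the
# row number, so the printed values show which rows ended up in each batch.
features = torch.arange(6, dtype=torch.float32).unsqueeze(1)  # shape (6, 1)
labels = torch.zeros(6, 1)
toy_dataset = TensorDataset(features, labels)

loader = DataLoader(toy_dataset, batch_size=2, shuffle=True)

for epoch in range(2):
    # Each new iteration over the loader draws a fresh permutation of all rows.
    order = [int(x) for inputs, _ in loader for x in inputs.flatten()]
    print(f"epoch {epoch}: row order {order}")
```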

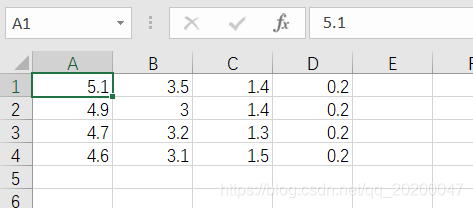

A simple dataset


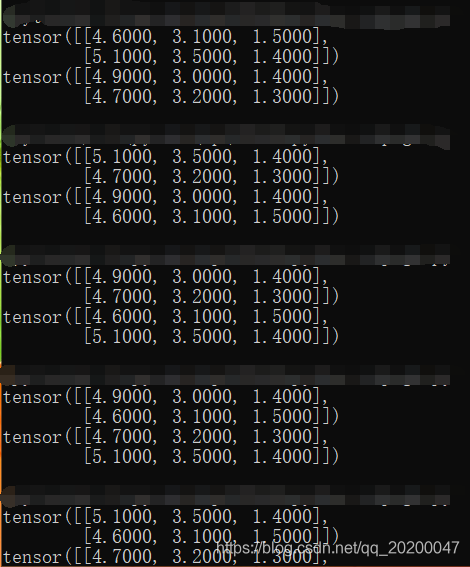

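The result with shuffle enabled: on every pass over the DataLoader (every epoch), the whole dataset is randomly reshuffled and then split into mini-batches of size n (here n = batch_size = 2).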
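One detail worth checking: "mini-batches of size n" holds for every batch only when the dataset size is a multiple of n. The sketch below uses a hypothetical 5-sample dataset. With the default drop_last=False, the last batch is smaller; with drop_last=True, it is discarded. The batch sizes do not depend on the shuffle order, so they are the same on every run.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset

# Five samples with batch_size=2: 5 is not a multiple of 2.
dataset = TensorDataset(torch.arange(5))

loader = DataLoader(dataset, batch_size=2, shuffle=True)  # drop_last=False by default
sizes = [len(batch[0]) for batch in loader]
print(sizes)  # → [2, 2, 1]

loader_drop = DataLoader(dataset, batch_size=2, shuffle=True, drop_last=True)
print([len(batch[0]) for batch in loader_drop])  # → [2, 2]
```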

The above is based on my personal experience; I hope it serves as a useful reference.

Original article: https://blog.csdn.net/qq_35248792/article/details/109510917