Introduction

This is a small deep-learning project for garbage classification, built on a deep residual network (ResNet).

Software architecture

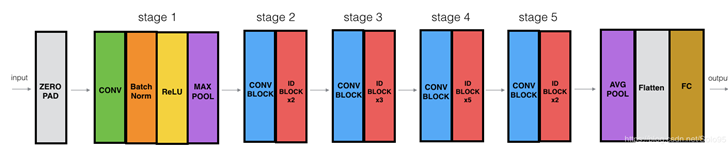

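- ResNet50 serves as the backbone; task-specific layers are appended after it so the network can be adapted to different classification tasks

- Training uses a generator that feeds the dataset into memory in a loop, and applies image augmentation so the model generalizes better

- Transfer learning starts from a ResNet50 weight file that excludes the network's output part; only the layers we add after the 5 stages are trained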
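The generator idea in the list above can be illustrated with a small sketch. The project's genit.py is not shown in this post, so everything here is hypothetical: `batch_generator` and `flip_horizontal` are illustrative names, images are plain nested lists rather than arrays, and a random horizontal flip stands in for whatever augmentation the project actually applies. The training code calls `len()` on its data source, which suggests a Keras `Sequence` rather than a bare generator, so read this only as the idea of looping over the data in shuffled, augmented batches.

```python
import random


def flip_horizontal(image):
    """Mirror an image stored as a list of pixel rows."""
    return [row[::-1] for row in image]


def batch_generator(samples, batch_size, seed=None):
    """Yield batches of (image, label) pairs forever, reshuffling each pass."""
    rng = random.Random(seed)
    order = list(range(len(samples)))
    while True:
        rng.shuffle(order)
        for start in range(0, len(order), batch_size):
            batch = []
            for i in order[start:start + batch_size]:
                image, label = samples[i]
                # Augmentation (assumed): flip about half of the images.
                if rng.random() < 0.5:
                    image = flip_horizontal(image)
                batch.append((image, label))
            yield batch
```

Because the generator never terminates, the caller decides how many batches make up one epoch, which is the role `steps_per_epoch` plays in Keras.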

Installation

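- The main third-party libraries are tensorflow 1.x, keras, opencv, pillow, scikit-learn, and numpy

- Installation is simple: open a terminal and run, for example: pip install numpy -i https://pypi.tuna.tsinghua.edu.cn/simple

- The dataset and weight files are fairly large, so they are not uploaded

- If you have trouble setting up the environment, or need the dataset and model weight files, describe your problem in the comments and I will help you remotely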
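When setting up the environment, note that some pip package names differ from the module names used in `import` statements (opencv-python is imported as `cv2`, pillow as `PIL`, scikit-learn as `sklearn`). The following is a minimal sketch of a check for the libraries listed above; `REQUIRED` and `missing_packages` are hypothetical helper names and are not part of the project.

```python
import importlib.util

# pip package name -> module name used in `import` statements
REQUIRED = {
    "tensorflow": "tensorflow",
    "keras": "keras",
    "opencv-python": "cv2",
    "pillow": "PIL",
    "scikit-learn": "sklearn",
    "numpy": "numpy",
}


def missing_packages(required=REQUIRED):
    """Return the pip names whose import module cannot be found."""
    return [pip_name for pip_name, module in required.items()
            if importlib.util.find_spec(module) is None]


if __name__ == "__main__":
    for name in missing_packages():
        print("missing:", name, "-> try: pip install", name)
```

The check only confirms that a module can be found; it does not verify versions, so it will not tell you whether the installed tensorflow is a 1.x release.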

Usage

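- The theory folder holds the notes I took during this deep-learning project, along with the console output from model training

- The initial weights needed for transfer learning and the model definition file resnet50.py are in the model folder

- To train, run trainnet.py; when training ends it creates the models folder and writes the resulting weights, garclass.h5, into it

- genit.py in the datagen folder handles image preprocessing and provides the data generator interface

- To classify garbage with the trained model, run demo.py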

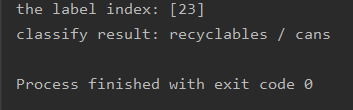

Results demo

cans (易拉罐, i.e. drink cans)
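demo.py itself is not shown in this post. As a rough sketch of the last step such a script would need, the function below turns a softmax output vector into a label. `top_prediction` and the label list in the usage line are hypothetical; only the `cans` class name comes from the demo result above.

```python
def top_prediction(probabilities, labels):
    """Return the (label, probability) pair with the highest probability."""
    best = max(range(len(probabilities)), key=probabilities.__getitem__)
    return labels[best], probabilities[best]
```

For example, `top_prediction([0.1, 0.7, 0.2], ["class_a", "cans", "class_c"])` picks the second entry, `cans`, because it carries the largest probability.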

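Code walkthrough

The actual model uses only the 5 stages of ResNet50; the output part after them has to be customized. The network structure is as follows:

Our custom network after stage 5 is as follows: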

```python
from keras import layers, regularizers
from keras.models import Model

# ResNet50 is assumed to be defined earlier in this file (model/resnet50.py);
# keras.applications.resnet50.ResNet50 accepts the same arguments.


def custom(input_size, num_classes, pretrain):
    """Add custom layers after ResNet50."""
    # Load the ResNet50 model without its output part
    base_model = ResNet50(weights=pretrain,
                          include_top=False,
                          pooling=None,
                          input_shape=(input_size, input_size, 3),
                          classes=num_classes)
    # Pretrained weights are loaded, so freeze the backbone and
    # apply transfer learning to the layers added below
    for layer in base_model.layers:
        layer.trainable = False
    # Add the new layers
    x = base_model.output
    x = layers.GlobalAveragePooling2D(name='avg_pool')(x)
    x = layers.Dropout(0.5, name='dropout1')(x)
    # A regularizer penalizes a layer's parameters or activations during
    # optimization: while the loss is minimized, the weights are also
    # constrained by a penalty term, here the L2 norm
    x = layers.Dense(512, activation='relu',
                     kernel_regularizer=regularizers.l2(0.0001),
                     name='fc2')(x)
    x = layers.BatchNormalization(name='bn_fc_01')(x)
    x = layers.Dropout(0.5, name='dropout2')(x)
    # Output layer: one unit per class (40 classes in this project)
    x = layers.Dense(num_classes, activation='softmax')(x)
    model = Model(inputs=base_model.input, outputs=x)
    # Compile the model
    model.compile(optimizer="adam", loss='categorical_crossentropy',
                  metrics=['accuracy'])
    return model
```

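To make the L2 penalty on the 512-unit dense layer concrete, here is a small worked sketch in plain Python. Keras's `regularizers.l2(factor)` adds `factor` times the sum of squared weights to the loss; the helper names `l2_penalty` and `regularized_loss` and the toy weights in the usage note are illustrative only.

```python
def l2_penalty(weights, factor=0.0001):
    """L2 penalty: factor times the sum of squared weights."""
    return factor * sum(w * w for w in weights)


def regularized_loss(data_loss, weights, factor=0.0001):
    """Total loss = data loss (e.g. cross-entropy) + L2 penalty."""
    return data_loss + l2_penalty(weights, factor)
```

With weights `[1.0, -2.0, 3.0]` the squared sum is 14, so the penalty is 0.0001 × 14 = 0.0014. Large weights are penalized quadratically, which nudges the optimizer toward smaller weights and helps limit overfitting.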
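Training is a transfer-learning process. Starting from the pretrained ResNet50 weights (the 5 stages are already trained, so the convolutional layers can already extract features), we first train only the fully connected layers at the end. After 4 epochs, we also fine-tune the later layers to reach higher accuracy. The training process is as follows: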

```python
from keras import callbacks, optimizers

# Project modules; these import paths are assumed from the folder layout
# (model/resnet50.py and datagen/genit.py)
from model import resnet50 as resnet
from datagen import genit


class Net():
    def __init__(self, img_size, gar_num, data_dir, batch_size, pretrain):
        self.img_size = img_size
        self.gar_num = gar_num
        self.data_dir = data_dir
        self.batch_size = batch_size
        self.pretrain = pretrain

    def build_train(self):
        """Transfer learning"""
        model = resnet.custom(self.img_size, self.gar_num, self.pretrain)
        model.summary()
        train_sequence, validation_sequence = genit.gendata(
            self.data_dir, self.batch_size, self.gar_num, self.img_size)
        epochs = 4
        model.fit_generator(train_sequence,
                            steps_per_epoch=len(train_sequence),
                            epochs=epochs, verbose=1,
                            validation_data=validation_sequence,
                            max_queue_size=10, shuffle=True)
        # Fine-tuning. In this project activation functions also count as
        # layers, giving 181 layers in total. Fine-tuning retrains some of
        # the convolutional layers together with the final dense layers
        freeze_until = 149
        learning_rate = 1e-4
        for layer in model.layers[:freeze_until]:
            layer.trainable = False
        for layer in model.layers[freeze_until:]:
            layer.trainable = True
        optimizer = optimizers.Adam(lr=learning_rate, decay=0.0005)
        model.compile(optimizer=optimizer, loss='categorical_crossentropy',
                      metrics=['accuracy'])
        model.fit_generator(
            train_sequence, steps_per_epoch=len(train_sequence),
            epochs=epochs * 2, verbose=1,
            callbacks=[
                callbacks.ModelCheckpoint('./models/garclass.h5',
                                          monitor='val_loss',
                                          save_best_only=True, mode='min'),
                callbacks.ReduceLROnPlateau(monitor='val_loss', factor=0.1,
                                            patience=10, mode='min'),
                callbacks.EarlyStopping(monitor='val_loss', patience=10),
            ],
            validation_data=validation_sequence,
            max_queue_size=10, shuffle=True)
        print('finish train,look for garclass.h5')
```
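The freeze/unfreeze split used for fine-tuning can be checked without Keras. The sketch below uses a stand-in `DummyLayer` class (hypothetical, not a Keras type) and the figures from the training code: 181 layers in total, with index 149 as the cut-off.

```python
class DummyLayer:
    """Stand-in for a Keras layer; only the `trainable` flag matters here."""

    def __init__(self, name):
        self.name = name
        self.trainable = False


def unfreeze_from(layers, freeze_until):
    """Freeze layers[:freeze_until], unfreeze the rest, return trainable count."""
    for layer in layers[:freeze_until]:
        layer.trainable = False
    for layer in layers[freeze_until:]:
        layer.trainable = True
    return sum(1 for layer in layers if layer.trainable)


layers = [DummyLayer("layer_%d" % i) for i in range(181)]
trainable_count = unfreeze_from(layers, 149)
```

Here `trainable_count` is 181 - 149 = 32, so only the last 32 layers are updated during fine-tuning. In Keras, a change to `trainable` takes effect only after the model is compiled again, which is why the training code calls `compile` a second time before the second `fit_generator`.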

The training results are as follows:

"""

loss: 0.7949 - acc: 0.9494 - val_loss: 0.9900 - val_acc: 0.8797

Training took about 9 hours

"""

With a better GPU, training would finish faster.

Finally

I hope everyone gets something out of deep learning; it has been a pleasure learning together with you.

Original link: https://blog.csdn.net/qq_40943760/article/details/106190943