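On a whim I wrote a multithreaded scraper that downloads pictures of pretty girls. The code still has a few flaws, but I'm recording it here anyway to share with everyone.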

Pic_downloader.py

```python
# -*- coding: utf-8 -*-
"""
Created on Fri Aug 07 17:30:58 2015

@author: Dreace
"""
import urllib2
import time
import os
import random
import threading
from multiprocessing.dummy import Pool as ThreadPool


def rename():
    # Timestamp used as the name of the output folder
    return time.strftime("%Y%m%d%H%M%S")


def rename_2(name):
    # Zero-pad the sequence number to three digits: "7" -> "007.jpg"
    return name.zfill(3) + '.jpg'


def download_pic(i):
    global count
    if Filter(i):
        try:
            url_content = urllib2.urlopen(i, timeout=time_out).read()
            # count is shared by all worker threads, so update it under a lock
            with count_lock:
                index = count
                count += 1
            with open(str(random.randint(10000, 999999999)) + "_" + rename_2(str(index)), "wb") as f:
                f.write(url_content)
        except Exception:
            print i + ": download failed or timed out, skipping!"


def Filter(content):
    # Keep the URL only if it is not served from any host in Filter_list
    for host in Filter_list:
        if content.find(host) != -1:
            return False
    return True


def get_pic(url_address):
    try:
        str_ = urllib2.urlopen(url_address, timeout=time_out).read()
        # Crude extraction: split the page source on double quotes and keep
        # every token that contains ".jpg"
        for i in str_.split("\""):
            if i.find(".jpg") != -1:
                pic_list.append(i)
    except Exception:
        print "Timed out while fetching the image list, skipping!"


MAX = 2          # number of listing pages to scan
count = 0        # images saved so far
count_lock = threading.Lock()
time_out = 60    # request timeout in seconds
thread_num = 30  # threads per pool
pic_list = []
page_list = []
Filter_list = ["imgsize.ph.126.net", "img.ph.126.net", "img2.ph.126.net"]
dir_name = os.path.join("C:\\Photos", rename())
os.makedirs(dir_name)
os.chdir(dir_name)
start_time = time.time()
url_address = "http://sexy.faceks.com/?page="
for i in range(1, MAX + 1):
    page_list.append(url_address + str(i))
page_pool = ThreadPool(thread_num)
page_pool.map(get_pic, page_list)
page_pool.close()
page_pool.join()
print "Found", len(pic_list), "images, starting download!"
pool = ThreadPool(thread_num)
pool.map(download_pic, pic_list)
pool.close()
pool.join()
print count, "images saved in " + dir_name
print "Total time:", time.time() - start_time, "s"
```
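
For comparison, here is a minimal Python 3 sketch of the same thread-pool idea. It uses `concurrent.futures.ThreadPoolExecutor` in place of `multiprocessing.dummy.Pool` and assumes you already have a list of image URLs. The names `download_all`, `is_allowed` and `http_fetch` are hypothetical, as is the `save(name, data)` callback. `fetch` is injectable, so the logic can be tried without network access. The shared counter is updated under a `threading.Lock` because `count += 1` is a read-modify-write and is not atomic across threads.

```python
import threading
import urllib.request
from concurrent.futures import ThreadPoolExecutor

# Hosts to skip; mirrors Filter_list in Pic_downloader.py.
BLOCKED_HOSTS = ("imgsize.ph.126.net", "img.ph.126.net", "img2.ph.126.net")


def is_allowed(url):
    """True only if the URL contains none of the blocked host names."""
    return not any(host in url for host in BLOCKED_HOSTS)


def http_fetch(url, timeout=60):
    """Default fetcher: read the whole response body as bytes."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return resp.read()


def download_all(urls, save, fetch=http_fetch, workers=30):
    """Fetch every allowed URL on a thread pool and hand the bytes to save().

    save(name, data) receives zero-padded names such as "000.jpg".
    Returns the number of images handed to save().
    """
    count = 0
    lock = threading.Lock()

    def worker(url):
        nonlocal count
        if not is_allowed(url):
            return
        try:
            data = fetch(url)
        except Exception as exc:  # timeouts, HTTP errors, bad URLs, ...
            print(f"{url}: download failed ({exc}), skipping")
            return
        with lock:  # the counter is shared by all worker threads
            index = count
            count += 1
        save(f"{index:03d}.jpg", data)

    with ThreadPoolExecutor(max_workers=workers) as pool:
        list(pool.map(worker, urls))  # list() waits for every task to finish
    return count
```

To write real files, pass a `save` callback that opens `os.path.join(output_dir, name)` in `"wb"` mode and writes `data`.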

Next, let's look at a script written by another netizen:

```python
#!/usr/bin/python
# coding: utf-8
#########################################################################
# File Name: main.py
# Author: mylonly
# mail: mylonly@gmail.com
# Created Time: Wed 11 Jun 2014 08:22:12 PM CST
#########################################################################
import re, urllib2, HTMLParser, threading, Queue

# Entry links of all galleries
htmlDoorList = []
# HTML links of the pages that contain images
htmlUrlList = []
# Queue of image URLs
imageUrlList = Queue.Queue(0)
# Number of image URLs captured
imageGetCount = 0
# Number of images downloaded
imageDownloadCount = 0
# Next-page link inside the current gallery, used to decide when to stop
nextHtmlUrl = ''
# Local save path (the directory must already exist)
localSavePath = '/data/1920x1080/'
# To download a different resolution, change replace_str. Available resolutions:
# 1920x1200, 1980x1920, 1680x1050, 1600x900, 1440x900, 1366x768, 1280x1024, 1024x768, 1280x800
replace_str = '1920x1080'
replaced_str = '960x600'
# Sentinel put on the queue once every image URL has been collected
DONE = None


# Parser for the inner (image) pages
class ImageHtmlParser(HTMLParser.HTMLParser):
    def __init__(self):
        self.nextUrl = ''
        HTMLParser.HTMLParser.__init__(self)

    def handle_starttag(self, tag, attrs):
        global imageGetCount, nextHtmlUrl
        if tag == 'img' and len(attrs) > 2:
            if attrs[0] == ('id', 'bigImg'):
                # Swap the preview resolution in the URL for the one we want
                url = attrs[1][1].replace(replaced_str, replace_str)
                imageUrlList.put(url)
                imageGetCount += 1
                print url
        elif tag == 'a' and len(attrs) == 4:
            if attrs[0] == ('id', 'pageNext') and attrs[1] == ('class', 'next'):
                nextHtmlUrl = attrs[2][1]


# Parser for the index (listing) pages
class IndexHtmlParser(HTMLParser.HTMLParser):
    def __init__(self):
        self.urlList = []
        self.index = 0
        self.nextUrl = ''
        # Gallery links come from an <a class="pic"> nested in <li class="photo-list-padding">
        self.tagList = ['li', 'a']
        self.classList = ['photo-list-padding', 'pic']
        HTMLParser.HTMLParser.__init__(self)

    def handle_starttag(self, tag, attrs):
        if tag == self.tagList[self.index]:
            for attr in attrs:
                if attr[1] == self.classList[self.index]:
                    if self.index == 0:
                        # Found the outer <li>
                        self.index = 1
                    else:
                        # Found the inner <a>: record its link
                        self.index = 0
                        print attrs[1][1]
                        self.urlList.append(attrs[1][1])
                    break
        elif tag == 'a':
            for attr in attrs:
                if attr[0] == 'id' and attr[1] == 'pageNext':
                    self.nextUrl = attrs[1][1]
                    print 'nextUrl:', self.nextUrl
                    break


# Index page parser
indexParser = IndexHtmlParser()
# Inner page parser
imageParser = ImageHtmlParser()

# Collect the entry links of all galleries from the index pages
print 'Scanning index pages...'
host = 'http://desk.zol.com.cn'
indexUrl = '/meinv/'
while indexUrl != '':
    print 'Fetching page:', host + indexUrl
    request = urllib2.Request(host + indexUrl)
    try:
        con = urllib2.urlopen(request).read()
        indexParser.feed(con)
        if indexUrl == indexParser.nextUrl:
            break
        indexUrl = indexParser.nextUrl
    except urllib2.URLError, e:
        print e.reason
        break  # otherwise the same URL would be retried forever

print 'Index scan finished, all gallery links collected:'
htmlDoorList = indexParser.urlList


# Walk every gallery and collect the URLs of its images
class getImageUrl(threading.Thread):
    def __init__(self):
        threading.Thread.__init__(self)

    def run(self):
        global nextHtmlUrl
        for door in htmlDoorList:
            print 'Collecting image URLs, entry page:', door
            nextHtmlUrl = ''
            while door != '':
                page = nextHtmlUrl or door
                print 'Fetching images from page %s...' % (host + page)
                request = urllib2.Request(host + page)
                try:
                    con = urllib2.urlopen(request).read()
                    imageParser.feed(con)
                    print 'Next page:', nextHtmlUrl
                    # End of the gallery: no next link, a link back to the
                    # entry page, or a link to the page just fetched
                    if nextHtmlUrl in ('', door, page):
                        break
                except urllib2.URLError, e:
                    print e.reason
                    break
        print 'All image URLs collected:', imageGetCount
        # Tell the download thread that no more URLs are coming
        imageUrlList.put(DONE)


# Download the images as their URLs arrive on the queue
class getImage(threading.Thread):
    def __init__(self):
        threading.Thread.__init__(self)

    def run(self):
        global imageDownloadCount
        print 'Starting image download...'
        while True:
            print 'Image URLs captured so far:', imageGetCount
            print 'Images downloaded so far:', imageDownloadCount
            # Block until a URL arrives; stop only at the sentinel, not
            # whenever the queue happens to be empty
            image = imageUrlList.get()
            if image is DONE:
                break
            print 'Image URL:', image
            try:
                cont = urllib2.urlopen(image).read()
                match = re.search(r'[0-9]*\.jpg', image)
                if match:
                    print 'Saving file:', match.group()
                    filename = localSavePath + match.group()
                    with open(filename, 'wb') as f:
                        f.write(cont)
                    imageDownloadCount += 1
                else:
                    print 'no match'
            except urllib2.URLError, e:
                print e.reason
        print 'All files downloaded...'


get = getImageUrl()
get.start()
print 'URL-collecting thread started'
download = getImage()
download.start()
print 'Download thread started'
```
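
`main.py` splits the work between two threads that share a `Queue`: one collects image URLs and the other downloads them. Below is a minimal Python 3 sketch of that producer/consumer pattern. It assumes a sentinel object marks the end of the stream, so the consumer blocks on `get()` instead of treating a momentarily empty queue as "finished". `extract` and `download` are hypothetical callables that stand in for the page-parsing and HTTP code. `producer`, `consumer` and `run_pipeline` are illustrative names.

```python
import queue
import threading

DONE = object()  # sentinel: "no more URLs will be produced"


def producer(pages, extract, q):
    """Put every image URL found on each page onto the queue, then DONE."""
    try:
        for page in pages:
            for url in extract(page):
                q.put(url)
    finally:
        q.put(DONE)  # always release the consumer, even if extract() fails


def consumer(q, download, results):
    """Download URLs until DONE arrives; an empty queue just means 'wait'."""
    while True:
        url = q.get()  # blocks while the producer is still working
        if url is DONE:
            break
        try:
            results.append(download(url))
        except OSError as exc:  # network errors: report and keep going
            print(f"{url}: {exc}")


def run_pipeline(pages, extract, download):
    """Run one producer and one consumer thread and return the results."""
    q = queue.Queue()
    results = []
    threads = [
        threading.Thread(target=producer, args=(pages, extract, q)),
        threading.Thread(target=consumer, args=(q, download, results)),
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results
```

With a single consumer and a FIFO queue, results come back in the order the URLs were produced. Adding more consumer threads would need one sentinel per consumer.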

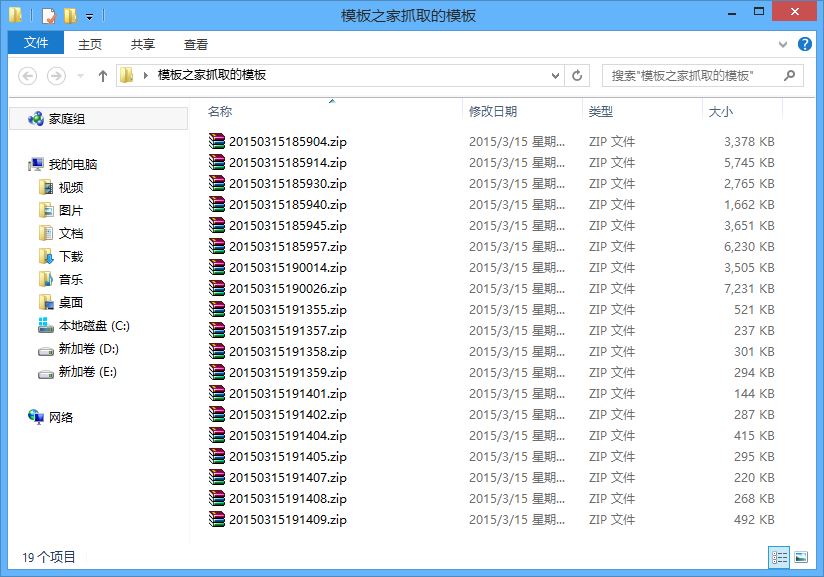
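
The parsers in `main.py` find attributes by position (for example `attrs[0] == ('id','bigImg')` and `attrs[1][1]`), so they stop matching if the site reorders attributes. Here is a Python 3 sketch with `html.parser.HTMLParser` that turns `attrs` into a dict and looks values up by name. The markup it expects is inferred from the script and is an assumption: `<img id="bigImg" src="...">` and a next link `<a id="pageNext" href="...">`, where treating the link as `href` is also a guess. `GalleryPageParser` is a hypothetical name.

```python
from html.parser import HTMLParser


class GalleryPageParser(HTMLParser):
    """Collect big-image URLs and the next-page link from one gallery page.

    Assumes markup like <img id="bigImg" src="..."> and
    <a id="pageNext" href="...">; the live site's HTML may differ.
    """

    def __init__(self, small="960x600", large="1920x1080"):
        super().__init__()
        self.small, self.large = small, large
        self.image_urls = []
        self.next_url = ""

    def handle_starttag(self, tag, attrs):
        a = dict(attrs)  # look attributes up by name, not by position
        if tag == "img" and a.get("id") == "bigImg" and a.get("src"):
            # Ask for the larger rendition by rewriting the size in the URL
            self.image_urls.append(a["src"].replace(self.small, self.large))
        elif tag == "a" and a.get("id") == "pageNext" and a.get("href"):
            self.next_url = a["href"]
```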

Batch-download every image on a given web page

```python
# -*- coding: utf-8 -*-
import os, urllib, urllib2, re

url = u"http://image.baidu.com/search/index?tn=baiduimage&ipn=r&ct=201326592&cl=2&lm=-1&st=-1&fm=index&fr=&sf=1&fmq=&pv=&ic=0&nc=1&z=&se=1&showtab=0&fb=0&width=&height=&face=0&istype=2&ie=utf-8&word=python&oq=python&rsp=-1"
outpath = "t:\\"


def getHtml(url):
    webfile = urllib.urlopen(url)
    outhtml = webfile.read()
    print outhtml
    return outhtml


def getImageList(html):
    # http or https URLs ending in .jpg, .jpeg, .png, .gif or .bmp
    restr = r'(https?://[^\s,"]*\.(?:jpg|jpeg|png|gif|bmp))'
    imgList = re.findall(restr, html)
    print imgList
    return imgList


def download(imgList, page):
    for x, imgurl in enumerate(imgList, 1):
        # Name: pic_<page>_<index> plus the lower-cased extension of the URL
        ext = os.path.splitext(urllib2.unquote(imgurl).split('/')[-1])[1].lower()
        filepathname = outpath + 'pic_%09d_%010d' % (page, x) + ext
        print '[Debug] Download file: ' + imgurl + ' >> ' + filepathname
        urllib.urlretrieve(imgurl, filepathname)


def downImageNum(pagenum):
    # Every iteration requests the same url; page only changes the file names
    for page in range(1, pagenum + 1):
        html = getHtml(url)             # get the HTML that url points to
        imageList = getImageList(html)  # get the addresses of all images as a list
        download(imageList, page)       # download all the images


if __name__ == '__main__':
    downImageNum(1)
```
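
The core of this third script is a single regular-expression pass over the page source. Below is a compact Python 3 sketch of that step. One pattern covers both `http` and `https` and all five extensions, and the local file name follows the script's `pic_%09d_%010d` scheme. Like the original, it only sees URLs that appear literally in the HTML, so images inserted later by JavaScript are missed. `extract_image_urls` and `local_name` are hypothetical helper names.

```python
import os
import re
from urllib.parse import unquote, urlsplit

# http or https URL ending in one of the five extensions the script looks for
IMAGE_URL_RE = re.compile(r'https?://[^\s,"]*\.(?:jpg|jpeg|png|gif|bmp)')


def extract_image_urls(html):
    """Return every matching image URL in the page text, in document order."""
    return IMAGE_URL_RE.findall(html)


def local_name(url, page, index, outdir="."):
    """Build e.g. pic_000000001_0000000002.jpg, keeping the URL's extension."""
    filename = unquote(urlsplit(url).path).rsplit("/", 1)[-1]
    ext = os.path.splitext(filename)[1].lower()
    return os.path.join(outdir, "pic_%09d_%010d%s" % (page, index, ext))
```

To actually save the files, each URL can then be passed to `urllib.request.urlretrieve(url, local_name(url, page, i))`.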

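Those are the three Python scripts collected here for batch-downloading pictures of pretty girls. All three are written for Python 2 (they use `urllib2` and the `print` statement). I hope they help you learn to write web crawlers in Python.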