Using urllib2: it is seriously powerful

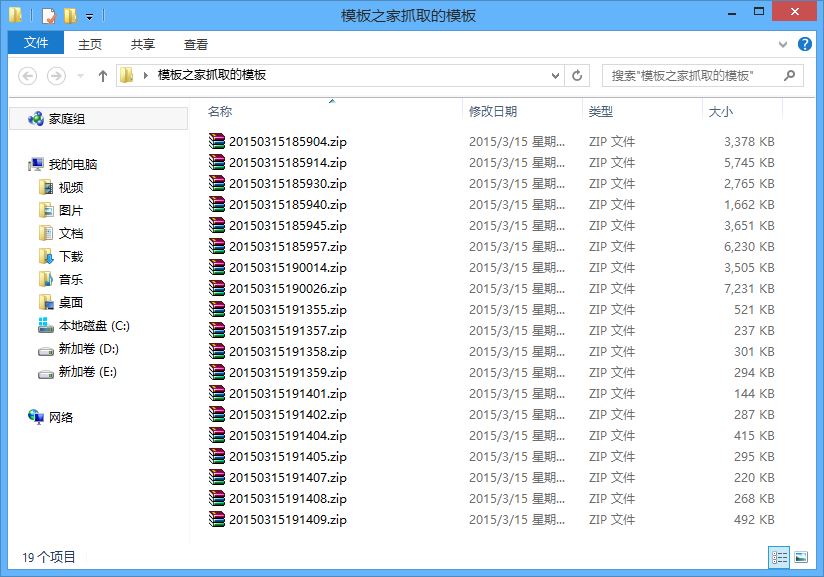

I tried logging in through a proxy to pull cookies, following redirects to grab images, and so on.

Docs: http://docs.python.org/library/urllib2.html

Straight to the demo code.

It covers: fetching a URL directly, using Request (POST/GET), using a proxy, cookies, and redirect handling.
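The Request (POST/GET) item is worth a quick illustration before the full listing. urllib2 exists only in Python 2, so this sketch assumes Python 3, where the same `Request` class lives in `urllib.request`. The URL is a hypothetical placeholder, and nothing is sent over the network: the request objects are only built and inspected.

```python
from urllib.parse import urlencode
from urllib.request import Request

# Hypothetical endpoint, used only to build Request objects; nothing is sent.
URL = "http://example.com/search"

params = {"wd": "a", "b": "2"}

# No data argument: the request is a GET, so parameters go in the query string.
get_req = Request(URL + "?" + urlencode(params))

# With a data argument (bytes in Python 3): the request becomes a POST.
post_req = Request(URL, data=urlencode(params).encode("ascii"))

# The method can also be set explicitly (Python 3.3+).
put_req = Request(URL, data=b"", method="PUT")

print(get_req.get_method(), get_req.full_url)
print(post_req.get_method(), post_req.data)
print(put_req.get_method())
```

In Python 2 the rule is the same (passing `data` switches the request to POST), but `data` is a plain `str` and there is no `method` argument, which is why the listing overrides `get_method` instead.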


    #!/usr/bin/python
    # -*- coding:utf-8 -*-
    # urllib2_test.py
    # author: wklken
    # 2012-03-17 wklken@yeah.net

    import urllib, urllib2, cookielib, socket

    url = "http://www.testurl....."  # change yourself


    # The simplest way
    def use_urllib2():
        try:
            f = urllib2.urlopen(url, timeout=5).read()
        except urllib2.URLError, e:
            print e.reason
            return  # f is undefined when the request fails
        print len(f)


    # Using Request
    def get_request():
        # A timeout can be set
        socket.setdefaulttimeout(5)
        # Parameters can be added [no data: GET is used; passing data as below: POST]
        params = {"wd": "a", "b": "2"}
        # Request headers can be added so the server can identify the client
        i_headers = {"User-Agent": "Mozilla/5.0 (Windows; U; Windows NT 5.1; zh-CN; rv:1.9.1) Gecko/20090624 Firefox/3.5",
                     "Accept": "text/plain"}
        # use POST to send params to the server; raises an exception if the server does not support it
        #req = urllib2.Request(url, data=urllib.urlencode(params), headers=i_headers)
        req = urllib2.Request(url, headers=i_headers)

        # After the request is created, more headers can be added; if a key repeats, the later one wins
        #req.add_header('Accept','application/json')
        # The HTTP method can be specified
        #req.get_method = lambda: 'PUT'
        try:
            page = urllib2.urlopen(req)
            print len(page.read())
            # like get
            #url_params = urllib.urlencode({"a":"1", "b":"2"})
            #final_url = url + "?" + url_params
            #print final_url
            #data = urllib2.urlopen(final_url).read()
            #print "Method:get ", len(data)
        except urllib2.HTTPError, e:
            print "Error Code:", e.code
        except urllib2.URLError, e:
            print "Error Reason:", e.reason


    def use_proxy():
        enable_proxy = False
        proxy_handler = urllib2.ProxyHandler({"http": "http://proxyurlXXXX.com:8080"})
        null_proxy_handler = urllib2.ProxyHandler({})
        if enable_proxy:
            opener = urllib2.build_opener(proxy_handler, urllib2.HTTPHandler)
        else:
            opener = urllib2.build_opener(null_proxy_handler, urllib2.HTTPHandler)
        # This line installs the opener as urllib2's global opener
        urllib2.install_opener(opener)
        content = urllib2.urlopen(url).read()
        print "proxy len:", len(content)


    # Cookie processor that returns the response for 400/403/500 instead of raising
    class NoExceptionCookieProcesser(urllib2.HTTPCookieProcessor):
        def http_error_403(self, req, fp, code, msg, hdrs):
            return fp

        def http_error_400(self, req, fp, code, msg, hdrs):
            return fp

        def http_error_500(self, req, fp, code, msg, hdrs):
            return fp


    def hand_cookie():
        cookie = cookielib.CookieJar()
        #cookie_handler = urllib2.HTTPCookieProcessor(cookie)
        # after adding the error-tolerant handler
        cookie_handler = NoExceptionCookieProcesser(cookie)
        opener = urllib2.build_opener(cookie_handler, urllib2.HTTPHandler)
        url_login = "https://www.yourwebsite/?login"
        params = {"username": "user", "password": "111111"}
        opener.open(url_login, urllib.urlencode(params))
        for item in cookie:
            print item.name, item.value
        #urllib2.install_opener(opener)
        #content = urllib2.urlopen(url).read()
        #print len(content)


    # Get the URL of the final page after N redirects
    def get_request_direct():
        import httplib
        httplib.HTTPConnection.debuglevel = 1
        request = urllib2.Request("http://www.google.com")
        request.add_header("Accept", "text/html,*/*")
        request.add_header("Connection", "Keep-Alive")
        opener = urllib2.build_opener()
        f = opener.open(request)
        print f.url
        print f.headers.dict
        print len(f.read())


    if __name__ == "__main__":
        use_urllib2()
        get_request()
        get_request_direct()
        use_proxy()
        hand_cookie()
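For readers on Python 3, where urllib2 was split into `urllib.request` and `urllib.error` and cookielib became `http.cookiejar`, the proxy and cookie parts of the demo might look roughly like the sketch below. It is not a port of the whole script. `TolerantCookieProcessor`, `make_opener`, and the proxy address are hypothetical names chosen for illustration. The code only builds the opener and inspects it, so it runs without network access.

```python
from http.cookiejar import CookieJar
from urllib.request import HTTPCookieProcessor, ProxyHandler, build_opener


class TolerantCookieProcessor(HTTPCookieProcessor):
    """Hand back the response for 400/403/500 instead of raising HTTPError."""

    def http_error_400(self, req, fp, code, msg, hdrs):
        return fp

    # Reuse the same behaviour for the other two status codes.
    http_error_403 = http_error_400
    http_error_500 = http_error_400


def make_opener(proxy_url=None):
    """Build an opener with a cookie jar and an optional HTTP proxy.

    proxy_url is a hypothetical parameter. When it is None, an empty
    mapping is passed to ProxyHandler, which disables proxies, including
    any picked up from environment variables.
    """
    jar = CookieJar()
    proxies = {"http": proxy_url} if proxy_url else {}
    opener = build_opener(ProxyHandler(proxies), TolerantCookieProcessor(jar))
    return opener, jar


# Placeholder proxy address; no connection is attempted here.
opener, jar = make_opener("http://proxy.example.com:8080")
handler_names = [type(h).__name__ for h in opener.handlers]
print(handler_names)
print(len(jar))  # the jar stays empty until a response sets cookies
```

A login would then be something like `opener.open(url_login, data)` with `data` encoded as bytes, after which iterating over `jar` yields the cookies the server set, as in `hand_cookie()` above.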