1. JavaScript encryption is the worst :-(

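1). Hiding code inside an `eval` of an immediately invoked function that depends on no outside variables is naive. Because the function is self-contained, it runs just as well outside the browser. Watch me take it apart with Node.js!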
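To illustrate point 1), here is a minimal sketch, not the author's code. It assumes the obfuscated script has the common packed form `eval(function(...){...}(...))` and that a `node` executable is on PATH. The trick is to rewrite `eval(` into `console.log(`, so Node.js prints the unpacked source instead of executing it. The helper names `eval_to_print` and `unpack_with_node` are hypothetical.

```python
import re
import shutil
import subprocess


def eval_to_print(js_source):
    """Rewrite eval( calls into console.log( so the script prints the code
    it would otherwise have executed."""
    # \b keeps identifiers such as `myeval(` intact; `obj.eval(` would still
    # be rewritten, so check the result by eye
    return re.sub(r'\beval\s*\(', 'console.log(', js_source)


def unpack_with_node(js_source, timeout=30):
    """Run the rewritten script with Node.js and return what it prints.
    Assumes a `node` executable is on PATH."""
    node = shutil.which('node')
    if node is None:
        raise RuntimeError('node not found on PATH')
    result = subprocess.run([node, '-e', eval_to_print(js_source)],
                            capture_output=True, text=True,
                            timeout=timeout, check=True)
    return result.stdout
```

This only works because the evaluated function needs nothing from the page. Code that touches `window` or `document` would need those objects stubbed out first.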

2). When working out how a server validates HTTP requests, try the `Referer` header first; cookies matter less than you might think.
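One way to apply point 2) systematically is to start with a bare request, then add `Referer`, and only add cookies if those fail. The sketch below assumes a hypothetical `send(headers)` callable that reports whether the server accepted the request. The urllib-based sender assumes acceptance means a non-empty 200 response body, which is a guess and not something the site documents.

```python
from urllib.error import URLError
from urllib.request import Request, urlopen


def find_minimal_headers(send, referer, cookie=None):
    """Return the smallest header set the server accepts: a bare request
    first, then Referer alone, then Referer plus Cookie."""
    candidates = [{}, {'Referer': referer}]
    if cookie is not None:
        candidates.append({'Referer': referer, 'Cookie': cookie})
    for headers in candidates:
        if send(headers):
            return headers
    return None


def urllib_sender(url, data):
    """Build a send(headers) callable that POSTs `data` (bytes) to `url`.
    Assumption: a non-empty 200 body means the request was accepted."""
    def send(headers):
        req = Request(url, data=data, headers=headers)
        try:
            with urlopen(req, timeout=10) as resp:
                return resp.status == 200 and bool(resp.read().strip())
        except URLError:  # HTTPError is a subclass of URLError
            return False
    return send
```

For example, passing `urllib_sender('http://www.feisuzw.com/skin/hongxiu/include/fe1sushow.php', b'bookid=2747&chapterid=2098547')` together with the chapter page URL as `referer` would show whether the `Referer` alone is enough.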

3). curl and the usual command-line tools (`tail`, `base64`, `sed`, `ascii2uni`) are really handy for processing text.

4). But doing the same in Python doesn't take many more lines.
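To back up points 3) and 4), here is the text-processing part of that command-line pipeline as one standalone Python function. It is a sketch that mirrors the decoding steps in `gather_content` in the main code, and the name `decode_chapter` is hypothetical. Unlike the main code, it strips the first three bytes only when they really are a UTF-8 BOM.

```python
import base64
from urllib.parse import unquote

BOM = b'\xef\xbb\xbf'


def decode_chapter(raw):
    """Undo the site's encoding layers on a raw chapter response (bytes)."""
    if raw.startswith(BOM):                # tail: drop the UTF-8 BOM
        raw = raw[len(BOM):]
    data = base64.decodebytes(raw)         # base64 -d
    data = data.replace(b'&#&', b'\\u')    # sed: restore \uXXXX escapes
    text = data.decode('unicode_escape')   # \uXXXX escapes -> characters
    text = unquote(text)                   # %XX escapes -> characters
    # turn <p> paragraphs into blank-line-separated text
    return text.replace('<p>', '').replace('</p>', '\n\n')
```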

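2. Requests is far more efficient than lxml's own URL fetching. The main code reuses a single `requests.Session`, which keeps connections alive between requests.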

3. The progressbar module is a bit too "advanced" for me, so I'd rather write my own...
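The main code imports `foreach` from winterpy's `termutils`, whose source is not shown here. Purely to illustrate rolling your own, here is a hypothetical stand-in with the same call shape the main code uses: `foreach(items, callback, start=...)`, where `callback(i, item)` returns a label to display. It redraws a single status line using `\r` and the ANSI erase-to-end-of-line sequence, so it assumes an ANSI-capable terminal.

```python
import sys


def foreach(items, callback, start=0, stream=None):
    """Call callback(i, item) for every item from index `start` on, showing
    '[done/total] label' on one terminal line.

    Hypothetical stand-in for winterpy's termutils.foreach."""
    if stream is None:
        stream = sys.stderr
    total = len(items)
    for i in range(start, total):
        label = callback(i, items[i])
        # '\r' returns to column 0; '\x1b[K' erases the rest of the old line
        stream.write('\r\x1b[K[%d/%d] %s' % (i + 1, total, label or ''))
        stream.flush()
```

Pressing Ctrl-C still raises `KeyboardInterrupt` out of the loop, which the main code catches to exit with status 130.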

4. argparse is a must-have for writing Python command-line programs!
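A small sketch of the argparse pattern the main program uses: a required URL, an optional positional filename template made optional with `nargs='?'`, and a 1-based `-s/--start` option. The `positive_int` type is a hypothetical addition not in the original. It rejects `-s 0`, which the original would otherwise pass on to `main` as `start=-1`.

```python
import argparse


def positive_int(text):
    """argparse type that accepts only integers >= 1."""
    value = int(text)  # a ValueError here becomes a normal usage error
    if value < 1:
        raise argparse.ArgumentTypeError('must be >= 1, got %d' % value)
    return value


def build_parser():
    parser = argparse.ArgumentParser(description='Download a novel')
    parser.add_argument('url', help="URL of the novel's index page")
    # nargs='?' makes a positional optional; `default` fills it when omitted
    parser.add_argument('name', nargs='?', default='$name-$author.txt',
                        help='output filename template')
    parser.add_argument('-s', '--start', type=positive_int, default=1,
                        metavar='N', help='chapter to start from (1-based)')
    return parser
```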

5. `string.Template` is handy too.
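Here is a sketch of how `string.Template` fills a `$name-$author.txt` pattern like the main code's default. `substitute` raises `KeyError` for a placeholder with no value, `safe_substitute` leaves it in place, and `${name}` separates a placeholder from adjacent text. Replacing `/` in the values is an assumption added here, since a book title containing a slash would otherwise point into a subdirectory. The helper name `make_filename` is hypothetical.

```python
from string import Template


def make_filename(template, **fields):
    """Fill $name/$author-style placeholders. Any '/' in a value becomes '_'
    (assumption: titles may contain one)."""
    safe = {key: value.replace('/', '_') for key, value in fields.items()}
    return Template(template).substitute(safe)
```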

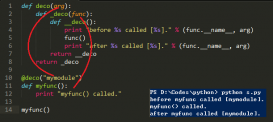

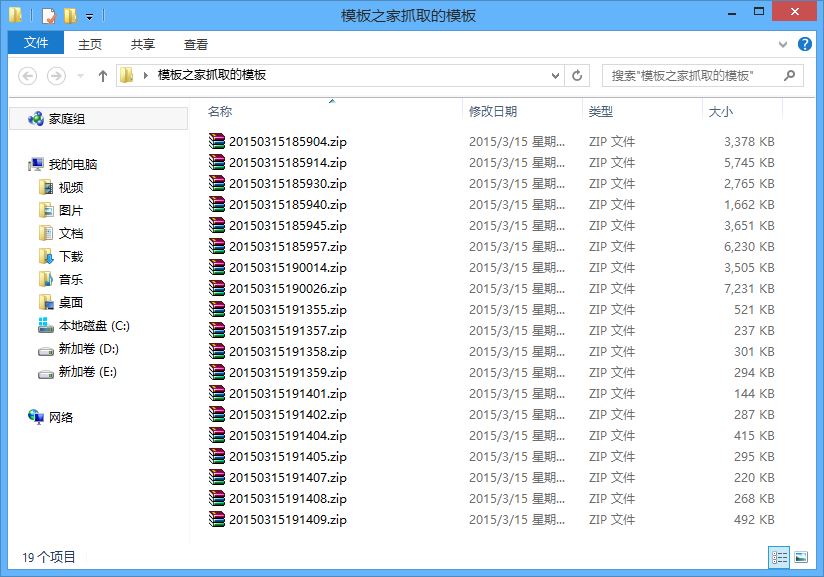

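6. Here is the main code. Apart from the standard library, lxml and requests, every module it needs is in the almighty winterpy repository. In fact, the main code itself is in there too.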

```python
#!/usr/bin/env python3
# vim:fileencoding=utf-8

import sys
from functools import partial
from string import Template
import argparse
import base64
from urllib.parse import unquote

from lxml.html import fromstring
import requests

# termutils comes from the winterpy repository
from termutils import foreach

# One shared session, so connections are reused across chapter requests
session = requests.Session()

def main(index, filename='$name-$author.txt', start=0):
    r = session.get(index)
    r.encoding = 'gb18030'  # decode the page as GB18030 (a superset of GBK)
    doc = fromstring(r.text, base_url=index)
    doc.make_links_absolute()
    name = doc.xpath('//div[@class="info"]/p[1]/a/text()')[0]
    author = doc.xpath('//div[@class="info"]/p[1]/span/text()')[0].split()[-1]
    nametmpl = Template(filename)
    fname = nametmpl.substitute(name=name, author=author)
    with open(fname, 'w', encoding='utf-8') as f:
        sys.stderr.write('Downloading to file %s.\n' % fname)
        links = doc.xpath('//div[@class="chapterlist"]/ul/li/a')
        try:
            foreach(links, partial(gather_content, f.write), start=start)
        except KeyboardInterrupt:
            sys.stderr.write('\n')
            sys.exit(130)  # 128 + SIGINT, the conventional status for Ctrl-C
    sys.stderr.write('\n')
    return True

def gather_content(write, i, l):
    # The same steps with command-line tools:
    # curl -XPOST -F bookid=2747 -F chapterid=2098547 'http://www.feisuzw.com/skin/hongxiu/include/fe1sushow.php'
    # --referer http://www.feisuzw.com/Html/2747/2098547.html
    # tail +4
    # base64 -d
    # sed 's/&#&/\\u/g'
    # ascii2uni -qaF
    # ascii2uni -qaJ
    # <p> paragraphs
    url = l.get('href')  # e.g. http://www.feisuzw.com/Html/2747/2098547.html
    _, _, _, _, bookid, chapterid = url.split('/')
    chapterid = chapterid.split('.', 1)[0]
    r = session.post('http://www.feisuzw.com/skin/hongxiu/include/fe1sushow.php', data={
        'bookid': bookid,
        'chapterid': chapterid,
    }, headers={'Referer': url})
    text = r.content[3:]  # strip the UTF-8 BOM
    text = base64.decodebytes(text).replace(b'&#&', br'\u')
    text = text.decode('unicode_escape')  # \uXXXX escapes -> characters
    text = unquote(text)                  # %XX escapes -> characters
    text = text.replace('<p>', '').replace('</p>', '\n\n')
    title = l.text
    write(title)
    write('\n\n')
    write(text)
    write('\n')
    return title

if __name__ == '__main__':
    parser = argparse.ArgumentParser(description='Download novels from 飛速中文網 (feisuzw.com)')
    parser.add_argument('url', help="URL of the novel's index page")
    parser.add_argument('name', default='$name-$author.txt', nargs='?',
                        help='output filename template (supports $name and $author)')
    parser.add_argument('-s', '--start', default=1, type=int, metavar='N',
                        help='chapter to start downloading from (1-based)')
    args = parser.parse_args()
    main(args.url, args.name, args.start - 1)
```
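In `gather_content`, the line `_, _, _, _, bookid, chapterid = url.split('/')` assumes the chapter URL splits into exactly six parts. A different number of path segments, or a `/` inside a query string, would raise `ValueError`. A slightly more forgiving variant parses only the URL path and takes its last two segments. This is a sketch, and the name `ids_from_chapter_url` is hypothetical.

```python
import posixpath
from urllib.parse import urlsplit


def ids_from_chapter_url(url):
    """Return (bookid, chapterid) from a chapter URL such as
    http://www.feisuzw.com/Html/2747/2098547.html"""
    path = urlsplit(url).path  # '/Html/2747/2098547.html'; query/fragment dropped
    bookid, filename = path.rstrip('/').split('/')[-2:]
    chapterid = posixpath.splitext(filename)[0]  # drop the '.html' suffix
    return bookid, chapterid
```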