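Overview

Starting today, we begin a journey into natural language processing (NLP). NLP lets machines process, understand, and use human language, building a bridge between machine language and human language.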

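Named Entities

A named entity (Named Entity) is an entity with a specific meaning in an NLP task, such as a person name, place name, organization name, or other proper noun. For example:

- Luke Rawlence is a person

- Aiimi and University of Lincoln are organizations

- Milton Keynes is a location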

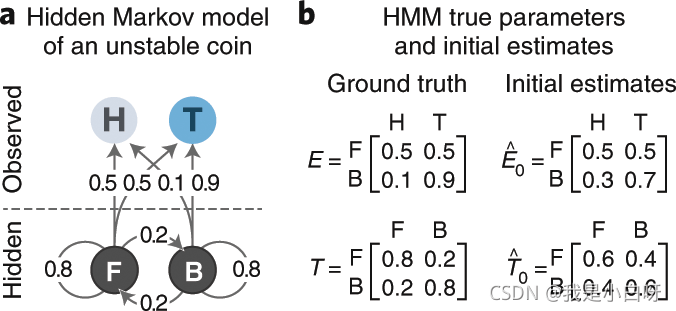

HMM

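A hidden Markov model (Hidden Markov Model) describes a Markov process with hidden, unknown parameters: the state sequence cannot be observed directly and has to be inferred from the observations it produces. As shown in the figure: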

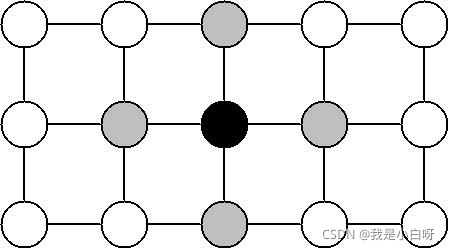
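To make the idea a little more concrete, here is a minimal sketch of the forward algorithm, which computes the probability of an observation sequence under an HMM by summing over every possible hidden state path. The two-state weather model and all of its probabilities are made-up illustration values, not something taken from this article.

```python
def forward(observations, states, start_p, trans_p, emit_p):
    """Return P(observations) under the HMM, summed over all hidden paths."""
    # alpha[s] = probability of the observations seen so far, ending in state s
    alpha = {s: start_p[s] * emit_p[s][observations[0]] for s in states}
    for obs in observations[1:]:
        alpha = {
            s: emit_p[s][obs] * sum(alpha[prev] * trans_p[prev][s] for prev in states)
            for s in states
        }
    return sum(alpha.values())


# Hypothetical toy model: the weather is hidden, the activity is observed.
states = ("Rainy", "Sunny")
start_p = {"Rainy": 0.6, "Sunny": 0.4}
trans_p = {
    "Rainy": {"Rainy": 0.7, "Sunny": 0.3},
    "Sunny": {"Rainy": 0.4, "Sunny": 0.6},
}
emit_p = {
    "Rainy": {"walk": 0.1, "shop": 0.4, "clean": 0.5},
    "Sunny": {"walk": 0.6, "shop": 0.3, "clean": 0.1},
}

prob = forward(["walk", "shop"], states, start_p, trans_p, emit_p)
print(prob)
```

The hidden states never appear in the result; only the observations are given, and the states are summed out.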

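Random Field

A random field (Random Field) consists of two elements: sites (Site) and a phase space (Phase Space). When every site is randomly assigned a value from the phase space according to some distribution, the whole collection is called a random field. As an analogy, the sites are plots of farmland and the phase space is the set of possible crops. We can plant different crops on different plots, which corresponds to assigning each "site" of the random field a value from the phase space. A random field, then, describes which crop is planted on which plot.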

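Markov Random Field

A Markov random field (Markov Random Field) is a special kind of random field: the crop planted on any plot depends only on the crops planted on its neighbouring plots. A collection with this property is a Markov random field.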

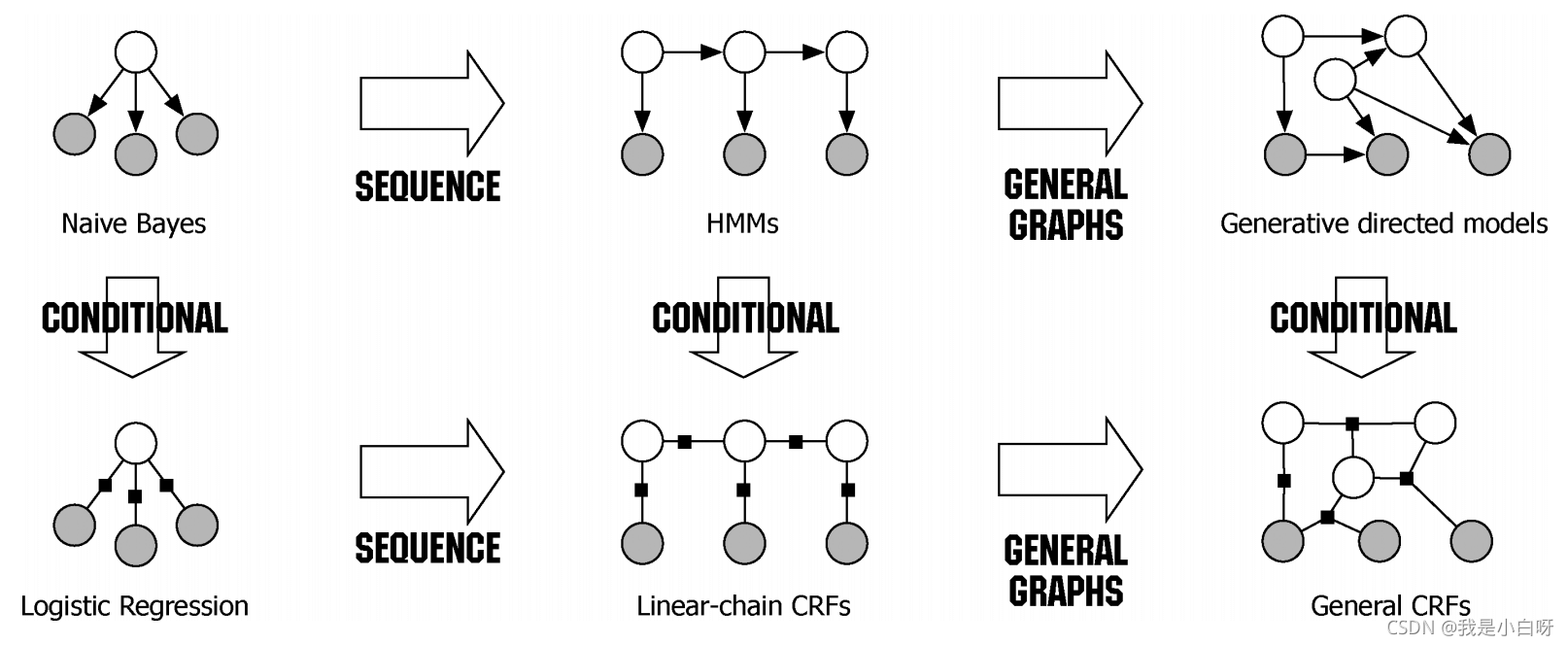
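The farmland picture can be sketched in code. The snippet below is only an illustration under assumptions: plots sit on a rectangular grid, the neighbours of a plot are the four adjacent plots, and a Potts-style weight `beta` (a made-up parameter) favours planting the same crop as a neighbour. The point it tries to show is that the conditional distribution for one plot reads nothing but its neighbours.

```python
import math

CROPS = ("wheat", "corn", "rice")  # the phase space (hypothetical crops)


def neighbours(grid, row, col):
    """Return the crops on the up/down/left/right neighbours of a plot."""
    result = []
    for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
        r, c = row + dr, col + dc
        if 0 <= r < len(grid) and 0 <= c < len(grid[0]):
            result.append(grid[r][c])
    return result


def local_conditional(grid, row, col, beta=1.0):
    """P(crop at (row, col) | neighbouring crops) under a Potts-style model."""
    near = neighbours(grid, row, col)
    # Each neighbour growing the same crop adds `beta` to that crop's score.
    weights = {crop: math.exp(beta * near.count(crop)) for crop in CROPS}
    total = sum(weights.values())
    return {crop: w / total for crop, w in weights.items()}


grid = [
    ["wheat", "wheat", "corn"],
    ["wheat", "rice", "corn"],
    ["rice", "rice", "corn"],
]
probs = local_conditional(grid, 1, 1)
print(probs)
```

Changing a plot that is not adjacent to `(1, 1)`, for example the corner `(0, 0)`, leaves `probs` unchanged, which is the Markov property described above.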

CRF

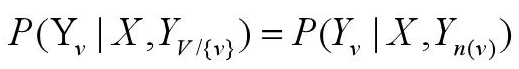

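A conditional random field (Conditional Random Field) is a Markov random field over the random variables Y, given the random variables X. In other words, a CRF models the conditional probability of one set of variables given another set, and it is commonly used for sequence labeling problems.

The formula is as follows: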
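For reference, the linear-chain CRF that is commonly used for sequence labeling is usually written in the following way. This is the standard textbook form, given here as an assumption about which formula is meant: $t_k$ are transition feature functions, $s_l$ are state feature functions, $\lambda_k$ and $\mu_l$ are their weights, and $Z(x)$ normalizes over all label sequences.

```latex
P(y \mid x) = \frac{1}{Z(x)} \exp\left( \sum_{i,k} \lambda_k \, t_k(y_{i-1}, y_i, x, i) + \sum_{i,l} \mu_l \, s_l(y_i, x, i) \right)

Z(x) = \sum_{y} \exp\left( \sum_{i,k} \lambda_k \, t_k(y_{i-1}, y_i, x, i) + \sum_{i,l} \mu_l \, s_l(y_i, x, i) \right)
```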

Named Entity Recognition in Practice

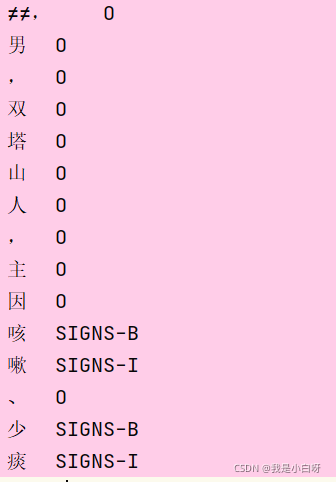

Dataset

We will use a medical named entity dataset. Its contents look like this:
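Judging from the loading code in the preprocessing step, each line of `data/train.txt` is expected to hold one character and its label separated by a tab. The sketch below parses a few such lines. The sample rows are reconstructed from the printed output further down in this article, so they are only an assumption about what the raw file looks like.

```python
# Assumed layout of data/train.txt: "<character>\t<label>" on every line.
sample = "咳\tSIGNS-B\n嗽\tSIGNS-I\n、\tO\n少\tSIGNS-B\n痰\tSIGNS-I\n"

pairs = []
for line in sample.splitlines():
    fields = line.rstrip().split("\t")
    if not fields[0]:
        continue  # skip empty lines
    # First field is the character, last field is its label
    pairs.append((fields[0], fields[-1]))

print(pairs)
```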

crf

import tensorflow as tf
import tensorflow.keras.backend as K
import tensorflow.keras.layers as L
from tensorflow_addons.text import crf_log_likelihood, crf_decode


class CRF(L.Layer):
    def __init__(self,
                 output_dim,
                 sparse_target=True,
                 **kwargs):
        """
        Args:
            output_dim (int): the number of labels to tag each temporal input.
            sparse_target (bool): whether the ground-truth label is represented in one-hot.
        Input shape:
            (batch_size, sentence length, output_dim)
        Output shape:
            (batch_size, sentence length, output_dim)
        """
        super(CRF, self).__init__(**kwargs)
        self.output_dim = int(output_dim)
        self.sparse_target = sparse_target
        self.input_spec = L.InputSpec(min_ndim=3)
        self.supports_masking = False
        self.sequence_lengths = None
        self.transitions = None

    def build(self, input_shape):
        assert len(input_shape) == 3
        f_shape = tf.TensorShape(input_shape)
        input_spec = L.InputSpec(min_ndim=3, axes={-1: f_shape[-1]})

        if f_shape[-1] is None:
            raise ValueError('The last dimension of the inputs to `CRF` '
                             'should be defined. Found `None`.')
        if f_shape[-1] != self.output_dim:
            raise ValueError('The last dimension of the input shape must be equal to output'
                             ' shape. Use a linear layer if needed.')
        self.input_spec = input_spec
        # Tag-to-tag transition scores, learned during training
        self.transitions = self.add_weight(name='transitions',
                                           shape=[self.output_dim, self.output_dim],
                                           initializer='glorot_uniform',
                                           trainable=True)
        self.built = True

    def compute_mask(self, inputs, mask=None):
        # Just pass the received mask from previous layer, to the next layer or
        # manipulate it if this layer changes the shape of the input
        return mask

    def call(self, inputs, sequence_lengths=None, training=None, **kwargs):
        sequences = tf.convert_to_tensor(inputs, dtype=self.dtype)
        if sequence_lengths is not None:
            assert len(sequence_lengths.shape) == 2
            assert tf.convert_to_tensor(sequence_lengths).dtype == 'int32'
            seq_len_shape = tf.convert_to_tensor(sequence_lengths).get_shape().as_list()
            assert seq_len_shape[1] == 1
            self.sequence_lengths = K.flatten(sequence_lengths)
        else:
            # No lengths given: treat every sequence as full length
            self.sequence_lengths = tf.ones(tf.shape(inputs)[0], dtype=tf.int32) * (
                tf.shape(inputs)[1]
            )

        # Viterbi decoding of the best tag sequence
        viterbi_sequence, _ = crf_decode(sequences,
                                         self.transitions,
                                         self.sequence_lengths)
        output = K.one_hot(viterbi_sequence, self.output_dim)
        # Raw scores during training, decoded one-hot tags at inference
        return K.in_train_phase(sequences, output)

    @property
    def loss(self):
        def crf_loss(y_true, y_pred):
            y_pred = tf.convert_to_tensor(y_pred, dtype=self.dtype)
            log_likelihood, self.transitions = crf_log_likelihood(
                y_pred,
                tf.cast(K.argmax(y_true), dtype=tf.int32) if self.sparse_target else y_true,
                self.sequence_lengths,
                transition_params=self.transitions,
            )
            # Negative log-likelihood averaged over the batch
            return tf.reduce_mean(-log_likelihood)

        return crf_loss

    @property
    def accuracy(self):
        def viterbi_accuracy(y_true, y_pred):
            # -1e10 to avoid zero at sum(mask)
            mask = K.cast(
                K.all(K.greater(y_pred, -1e10), axis=2), K.floatx())
            shape = tf.shape(y_pred)
            sequence_lengths = tf.ones(shape[0], dtype=tf.int32) * (shape[1])
            y_pred, _ = crf_decode(y_pred, self.transitions, sequence_lengths)
            if self.sparse_target:
                y_true = K.argmax(y_true, 2)
            y_pred = K.cast(y_pred, 'int32')
            y_true = K.cast(y_true, 'int32')
            corrects = K.cast(K.equal(y_true, y_pred), K.floatx())
            return K.sum(corrects * mask) / K.sum(mask)

        return viterbi_accuracy

    def compute_output_shape(self, input_shape):
        tf.TensorShape(input_shape).assert_has_rank(3)
        return input_shape[:2] + (self.output_dim,)

    def get_config(self):
        config = {
            'output_dim': self.output_dim,
            'sparse_target': self.sparse_target,
            'supports_masking': self.supports_masking,
            'transitions': K.eval(self.transitions)
        }
        base_config = super(CRF, self).get_config()
        return dict(base_config, **config)
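The layer hands decoding over to `crf_decode`, which looks for the highest-scoring tag sequence. As a rough illustration of that idea (not the TensorFlow Addons implementation), the following pure-Python sketch runs Viterbi decoding over per-position tag scores plus a transition matrix. The function name `viterbi_decode` and the toy scores are assumptions made for this example.

```python
def viterbi_decode(emissions, transitions):
    """Return (best_path, best_score) for a single sequence.

    emissions[t][j]   : score of tag j at position t
    transitions[i][j] : score of moving from tag i to tag j
    """
    num_tags = len(transitions)
    # score[j] = best score of any path ending in tag j at the current position
    score = list(emissions[0])
    backpointers = []
    for t in range(1, len(emissions)):
        new_score, pointers = [], []
        for j in range(num_tags):
            best_prev = max(range(num_tags), key=lambda i: score[i] + transitions[i][j])
            pointers.append(best_prev)
            new_score.append(score[best_prev] + transitions[best_prev][j] + emissions[t][j])
        score = new_score
        backpointers.append(pointers)

    # Follow the back-pointers starting from the best final tag.
    last = max(range(num_tags), key=lambda j: score[j])
    path = [last]
    for pointers in reversed(backpointers):
        path.append(pointers[path[-1]])
    path.reverse()
    return path, score[last]


# Toy example with two tags (0 and 1) and three positions.
emissions = [[1.0, 0.0], [0.0, 0.5], [0.0, 2.0]]
transitions = [[0.5, -1.0], [-1.0, 0.5]]
path, best = viterbi_decode(emissions, transitions)
print(path, best)
```

In this toy case the first position on its own prefers tag 0, but the transition scores reward staying on the same tag, so the best overall path keeps tag 1 throughout. That interaction between per-position scores and transitions is what the `transitions` weight in the layer is meant to learn.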

Preprocessing

import numpy as np
import tensorflow as tf


def build_data():
    """
    Load the data.
    :return: the data (characters, labels) / a dictionary of every character seen
    """
    # Holds all samples
    datas = []
    # Holds x (characters of the current sentence)
    sample_x = []
    # Holds y (labels of the current sentence)
    sample_y = []
    # Holds the vocabulary
    vocabs = {'UNK'}

    # Iterate over the file line by line
    with open("data/train.txt", encoding="utf-8") as f:
        for line in f:
            # Split into fields
            line = line.rstrip().split('\t')
            # Take the character
            char = line[0]
            # Skip if the character is empty
            if not char:
                continue
            # Take the label that belongs to the character
            cate = line[-1]
            # append
            sample_x.append(char)
            sample_y.append(cate)
            vocabs.add(char)
            # Sentence-ending punctuation marks the end of a sample
            if char in ['。', '?', '!', '!', '?']:
                datas.append([sample_x, sample_y])
                # Reset
                sample_x = []
                sample_y = []

    # Convert the set into a dictionary of the characters seen
    word_dict = {wd: index for index, wd in enumerate(list(vocabs))}
    print("vocab_size:", len(word_dict))
    return datas, word_dict


def modify_data():
    # Load the data
    datas, word_dict = build_data()
    X, y = zip(*datas)
    print(X[:5])
    print(y[:5])

    # tokenizer
    tokenizer = tf.keras.preprocessing.text.Tokenizer()
    tokenizer.fit_on_texts(word_dict)
    X_train = tokenizer.texts_to_sequences(X)

    # Padding
    X_train = tf.keras.preprocessing.sequence.pad_sequences(X_train, 150)
    print(X_train[:5])

    class_dict = {
        'O': 0,
        'TREATMENT-I': 1,
        'TREATMENT-B': 2,
        'BODY-B': 3,
        'BODY-I': 4,
        'SIGNS-I': 5,
        'SIGNS-B': 6,
        'CHECK-B': 7,
        'CHECK-I': 8,
        'DISEASE-I': 9,
        'DISEASE-B': 10
    }

    # tokenize (this replaces the Tokenizer result above)
    X_train = [[word_dict[char] for char in data[0]] for data in datas]
    y_train = [[class_dict[label] for label in data[1]] for data in datas]
    print(X_train[:5])
    print(y_train[:5])

    # padding
    X_train = tf.keras.preprocessing.sequence.pad_sequences(X_train, 150)
    y_train = tf.keras.preprocessing.sequence.pad_sequences(y_train, 150)
    y_train = np.expand_dims(y_train, 2)

    # ndarray
    X_train = np.asarray(X_train)
    y_train = np.asarray(y_train)
    print(X_train.shape)
    print(y_train.shape)

    return X_train, y_train


if __name__ == '__main__':
    modify_data()
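`pad_sequences` is called here with a maximum length of 150 and otherwise default settings, which in Keras means zeros are added at the front and over-long sequences are cut from the front. The helper below, a hypothetical pure-Python `pad_pre`, mimics that behaviour on a tiny example so that the zero-filled rows in the printed arrays are easier to read.

```python
def pad_pre(sequences, maxlen, value=0):
    """Left-pad (and left-truncate) every sequence to exactly maxlen items."""
    padded = []
    for seq in sequences:
        seq = list(seq)
        if len(seq) > maxlen:
            # Keep only the last maxlen items
            seq = seq[len(seq) - maxlen:]
        padded.append([value] * (maxlen - len(seq)) + seq)
    return padded


print(pad_pre([[5, 6, 7], [1, 2, 3, 4, 5, 6]], maxlen=5))
# [[0, 0, 5, 6, 7], [2, 3, 4, 5, 6]]
```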

Main Program

import tensorflow as tf
from pre_processing import modify_data
from crf import CRF

# Hyperparameters
EPOCHS = 10  # number of epochs
BATCH_SIZE = 64  # number of samples per training batch
learning_rate = 0.00003  # learning rate
VOCAB_SIZE = 1759 + 1
optimizer = tf.keras.optimizers.Adam(learning_rate=learning_rate)  # optimizer
loss = tf.keras.losses.CategoricalCrossentropy()  # loss


def main():
    # Load the data
    X_train, y_train = modify_data()

    model = tf.keras.Sequential([
        tf.keras.layers.Embedding(VOCAB_SIZE, 300),
        tf.keras.layers.Bidirectional(tf.keras.layers.LSTM(128, dropout=0.5, recurrent_dropout=0.5, return_sequences=True)),
        tf.keras.layers.Bidirectional(tf.keras.layers.LSTM(64, dropout=0.5, recurrent_dropout=0.5, return_sequences=True)),
        tf.keras.layers.TimeDistributed(tf.keras.layers.Dense(1)),
        CRF(1, sparse_target=True)
    ])

    # Compile
    model.compile(optimizer=optimizer, loss=loss, metrics=["accuracy"])

    # summary
    model.build([None, 150])
    print(model.summary())

    # Save the best weights
    checkpoint = tf.keras.callbacks.ModelCheckpoint(
        "../model/model.h5", monitor='val_loss',
        verbose=1, save_best_only=True, mode='min',
        save_weights_only=True
    )

    # Train
    model.fit(X_train, y_train, validation_split=0.2, epochs=EPOCHS, batch_size=BATCH_SIZE, callbacks=[checkpoint])


if __name__ == '__main__':
    main()

Output:

vocab_size: 1759

(['≠≠,', '男', ',', '雙', '塔', '山', '人', ',', '主', '因', '咳', '嗽', '、', '少', '痰', '1', '個', '月', ',', '加', '重', '3', '天', ',', '抽', '搐', '1', '次', '于', '2', '0', '1', '6', '年', '1', '2', '月', '0', '8', '日', '0', '7', ':', '0', '0', '以', '1', '、', '肺', '炎', '2', '、', '抽', '搐', '待', '查', '收', '入', '院', '。'], ['性', '疼', '痛', '1', '年', '收', '入', '院', '。'], [',', '男', ',', '4', '歲', ',', '河', '北', '省', '承', '德', '市', '雙', '灤', '區', '陳', '柵', '子', '鄉', '陳', '柵', '子', '村', '人', ',', '主', '因', '"', '咳', '嗽', '、', '咳', '痰', ',', '伴', '發', '熱', '6', '天', '"', '于', '2', '0', '1', '6', '年', '1', '2', '月', '1', '3', '日', '1', '1', ':', '4', '7', '以', '支', '氣', '管', '肺', '炎', '收', '入', '院', '。'], ['2', '年', '膀', '胱', '造', '瘺', '口', '出', '尿', '1', '年', '于', '2', '0', '1', '7', '-', '-', '0', '2', '-', '-', '0', '6', '收', '入', '院', '。'], [';', 'n', 'b', 's', 'p', ';', '郎', '鴻', '雁', '女', '5', '9', '歲', '已', '婚', ' ', '漢', '族', ' ', '河', '北', '承', '德', '雙', '灤', '區', '人', ',', '現', '住', '電', '廠', '家', '屬', '院', ',', '主', '因', '肩', '頸', '部', '疼', '痛', '1', '0', '余', '年', ',', '加', '重', '2', '個', '月', '于', '2', '0', '1', '6', '-', '0', '1', '-', '1', '8', ' ', '9', ':', '1', '9', '收', '入', '院', '。'])

(['O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'SIGNS-B', 'SIGNS-I', 'O', 'SIGNS-B', 'SIGNS-I', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'SIGNS-B', 'SIGNS-I', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'DISEASE-B', 'DISEASE-I', 'O', 'O', 'SIGNS-B', 'SIGNS-I', 'O', 'O', 'O', 'O', 'O', 'O'], ['O', 'SIGNS-B', 'SIGNS-I', 'O', 'O', 'O', 'O', 'O', 'O'], ['O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'SIGNS-B', 'SIGNS-I', 'O', 'SIGNS-B', 'SIGNS-I', 'O', 'O', 'SIGNS-B', 'SIGNS-I', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'DISEASE-B', 'DISEASE-I', 'DISEASE-I', 'DISEASE-I', 'DISEASE-I', 'O', 'O', 'O', 'O'], ['O', 'O', 'BODY-B', 'BODY-I', 'BODY-I', 'BODY-I', 'BODY-I', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O'], ['O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'BODY-B', 'BODY-I', 'BODY-I', 'SIGNS-B', 'SIGNS-I', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O', 'O'])

[[ 0 0 0 0 0 0 0 0 0 0 0 0 0 0

0 0 0 0 0 0 0 0 0 0 0 0 0 0

0 0 0 0 0 0 0 0 0 0 0 0 0 0

0 0 0 0 0 0 0 0 0 0 0 0 0 0

0 0 0 0 0 0 0 0 0 0 0 0 0 0

0 0 0 0 0 0 0 0 0 0 0 0 0 0

0 0 0 0 0 0 0 880 1182 602 698 1530 1630 1457

602 31 878 1388 124 1211 225 346 456 267 1430 602 542 677

796 272 602 238 1251 456 1170 1268 577 46 456 1056 1641 456

577 1430 46 699 853 46 1231 46 46 1152 456 1211 797 1323

577 1211 238 1251 591 1364 1133 513 282 1232]

[ 0 0 0 0 0 0 0 0 0 0 0 0 0 0

0 0 0 0 0 0 0 0 0 0 0 0 0 0

0 0 0 0 0 0 0 0 0 0 0 0 0 0

0 0 0 0 0 0 0 0 0 0 0 0 0 0

0 0 0 0 0 0 0 0 0 0 0 0 0 0

0 0 0 0 0 0 0 0 0 0 0 0 0 0

0 0 0 0 0 0 0 0 0 0 0 0 0 0

0 0 0 0 0 0 0 0 0 0 0 0 0 0

0 0 0 0 0 0 0 0 0 0 0 0 0 0

0 0 0 0 0 0 0 0 0 0 0 0 0 0

0 1514 1259 709 456 1641 1133 513 282 1232]

[ 0 0 0 0 0 0 0 0 0 0 0 0 0 0

0 0 0 0 0 0 0 0 0 0 0 0 0 0

0 0 0 0 0 0 0 0 0 0 0 0 0 0

0 0 0 0 0 0 0 0 0 0 0 0 0 0

0 0 0 0 0 0 0 0 0 0 0 0 0 0

0 0 0 0 0 0 0 0 0 0 0 0 0 0

0 0 602 1182 602 1090 959 602 1155 1708 882 426 1426 1561

698 1242 908 174 1445 1334 229 174 1445 1334 1199 1457 602 31

878 1388 124 1211 1388 346 602 216 767 371 1056 272 1268 577

46 456 1056 1641 456 577 1430 456 796 853 456 456 1090 1231

1152 1455 669 1322 797 1323 1133 513 282 1232]

[ 0 0 0 0 0 0 0 0 0 0 0 0 0 0

0 0 0 0 0 0 0 0 0 0 0 0 0 0

0 0 0 0 0 0 0 0 0 0 0 0 0 0

0 0 0 0 0 0 0 0 0 0 0 0 0 0

0 0 0 0 0 0 0 0 0 0 0 0 0 0

0 0 0 0 0 0 0 0 0 0 0 0 0 0

0 0 0 0 0 0 0 0 0 0 0 0 0 0

0 0 0 0 0 0 0 0 0 0 0 0 0 0

0 0 0 0 0 0 0 0 0 0 0 0 0 0

577 1641 1584 734 1643 1126 186 896 967 456 1641 1268 577 46

456 1231 46 577 46 1056 1133 513 282 1232]

[ 0 0 0 0 0 0 0 0 0 0 0 0 0 0

0 0 0 0 0 0 0 0 0 0 0 0 0 0

0 0 0 0 0 0 0 0 0 0 0 0 0 0

0 0 0 0 0 0 0 0 0 0 0 0 0 0

0 0 0 0 0 0 0 0 0 0 0 0 0 0

0 0 0 0 0 0 0 0 0 0 0 0 0 0

0 1398 7 14 16 103 290 1491 1483 1024 1531 959 1081 559

845 114 1155 1708 426 1426 698 1242 908 1457 602 583 188 1575

1379 1337 326 282 602 31 878 1439 885 1520 1259 709 456 46

1625 1641 602 542 677 577 267 1430 1268 577 46 456 1056 46

456 456 699 1531 456 1531 1133 513 282 1232]]

[[891, 1203, 604, 702, 1562, 1665, 1486, 604, 11, 889, 1413, 110, 1233, 213, 337, 453, 255, 1457, 604, 542, 681, 803, 260, 604, 226, 1275, 453, 1190, 1292, 579, 26, 453, 1072, 1676, 453, 579, 1457, 26, 703, 864, 26, 1255, 1465, 26, 26, 1172, 453, 1233, 804, 1347, 579, 1233, 226, 1275, 593, 1388, 1153, 512, 270, 1256], [1546, 1283, 713, 453, 1676, 1153, 512, 270, 1256], [604, 1203, 604, 1108, 971, 604, 1175, 1745, 893, 421, 1451, 1594, 702, 1266, 919, 160, 1473, 1358, 217, 160, 1473, 1358, 1221, 1486, 604, 11, 889, 1127, 1413, 110, 1233, 1413, 337, 604, 204, 772, 362, 1072, 260, 1127, 1292, 579, 26, 453, 1072, 1676, 453, 579, 1457, 453, 803, 864, 453, 453, 1465, 1108, 1255, 1172, 1484, 673, 1346, 804, 1347, 1153, 512, 270, 1256], [579, 1676, 1618, 738, 1678, 1145, 173, 907, 979, 453, 1676, 1292, 579, 26, 453, 1255, 1495, 1495, 26, 579, 1495, 1495, 26, 1072, 1153, 512, 270, 1256], [369, 1423, 811, 1730, 986, 369, 88, 278, 1522, 1514, 1039, 1563, 971, 1099, 560, 1234, 855, 100, 1234, 1175, 1745, 421, 1451, 702, 1266, 919, 1486, 604, 585, 175, 1609, 1403, 1361, 317, 270, 604, 11, 889, 1467, 896, 1552, 1283, 713, 453, 26, 1660, 1676, 604, 542, 681, 579, 255, 1457, 1292, 579, 26, 453, 1072, 1495, 26, 453, 1495, 453, 703, 1234, 1563, 1465, 453, 1563, 1153, 512, 270, 1256]]

[[0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 6, 5, 0, 6, 5, 0, 0, 0, 0, 0, 0, 0, 0, 0, 6, 5, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 10, 9, 0, 0, 6, 5, 0, 0, 0, 0, 0, 0], [0, 6, 5, 0, 0, 0, 0, 0, 0], [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 6, 5, 0, 6, 5, 0, 0, 6, 5, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 10, 9, 9, 9, 9, 0, 0, 0, 0], [0, 0, 3, 4, 4, 4, 4, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0], [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 3, 4, 4, 6, 5, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0]]

(7836, 150)

(7836, 150, 1)

Model: "sequential"
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
embedding (Embedding)        (None, None, 300)         528000
_________________________________________________________________
bidirectional (Bidirectional (None, None, 256)         439296
_________________________________________________________________
bidirectional_1 (Bidirection (None, None, 128)         164352
_________________________________________________________________
time_distributed (TimeDistri (None, None, 1)           129
_________________________________________________________________
crf (CRF)                    (None, None, 1)           1
=================================================================
Total params: 1,131,778
Trainable params: 1,131,778
Non-trainable params: 0
_________________________________________________________________
None

2021-11-23 00:31:29.846318: I tensorflow/compiler/mlir/mlir_graph_optimization_pass.cc:116] None of the MLIR optimization passes are enabled (registered 2)

Epoch 1/10

10/98 [==>...........................] - ETA: 7:52 - loss: 5.2686e-08 - accuracy: 0.9232
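Predicted tags still have to be turned back into entities. The sketch below shows one way this could be done for the `TYPE-B` / `TYPE-I` scheme used in `class_dict`. The function name `extract_entities` is hypothetical, and the input is the second sample from the printed output above.

```python
def extract_entities(chars, labels):
    """Group characters tagged TYPE-B, TYPE-I, ... into (text, TYPE) pairs."""
    entities = []
    current_chars, current_type = [], None
    for char, label in zip(chars, labels):
        kind, _, position = label.rpartition("-")
        if position == "B":
            # A new entity starts; close the previous one if there is any
            if current_chars:
                entities.append(("".join(current_chars), current_type))
            current_chars, current_type = [char], kind
        elif position == "I" and kind == current_type:
            current_chars.append(char)
        else:
            # "O" or an inconsistent tag closes the current entity
            if current_chars:
                entities.append(("".join(current_chars), current_type))
            current_chars, current_type = [], None
    if current_chars:
        entities.append(("".join(current_chars), current_type))
    return entities


chars = ['性', '疼', '痛', '1', '年', '收', '入', '院', '。']
labels = ['O', 'SIGNS-B', 'SIGNS-I', 'O', 'O', 'O', 'O', 'O', 'O']
print(extract_entities(chars, labels))
# [('疼痛', 'SIGNS')]
```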


Original link: https://blog.csdn.net/weixin_46274168/article/details/121484835