Spark SerializedLambda error

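When developing a Spark program in IDEA, you may run into a lambda-related exception. This post reproduces the exception and shows how to fix it.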

Example

    import org.apache.spark.SparkConf;
    import org.apache.spark.api.java.JavaRDD;
    import org.apache.spark.api.java.JavaSparkContext;
    import org.apache.spark.api.java.function.Function;

    public class SimpleApp {
        public static void main(String[] args) {
            String logFile = "/soft/dounine/github/spark-learn/README.md"; // Should be some file on your system
            SparkConf sparkConf = new SparkConf()
                    .setMaster("spark://localhost:7077")
                    .setAppName("Demo");
            JavaSparkContext sc = new JavaSparkContext(sparkConf);
            JavaRDD<String> logData = sc.textFile(logFile).cache();
            long numAs = logData.filter(s -> s.contains("a")).count();
            long numBs = logData.map(new Function<String, Integer>() {
                @Override
                public Integer call(String v1) throws Exception {
                    return 1;
                }
            }).reduce((a,b)->a+b);
            System.out.println("Lines with a: " + numAs + ", lines with b: " + numBs);
            sc.stop();
        }
    }

Because the code uses JDK 1.8 lambda expressions, running it produces the following exception:

    18/08/06 15:18:41 WARN TaskSetManager: Lost task 0.0 in stage 0.0 (TID 0, 192.168.0.107, executor 0): java.lang.ClassCastException: cannot assign instance of java.lang.invoke.SerializedLambda to field org.apache.spark.api.java.JavaRDD$$anonfun$filter$1.f$1 of type org.apache.spark.api.java.function.Function in instance of org.apache.spark.api.java.JavaRDD$$anonfun$filter$1
        at java.io.ObjectStreamClass$FieldReflector.setObjFieldValues(ObjectStreamClass.java:2233)
        at java.io.ObjectStreamClass.setObjFieldValues(ObjectStreamClass.java:1405)
        at java.io.ObjectInputStream.defaultReadFields(ObjectInputStream.java:2290)
        at java.io.ObjectInputStream.readSerialData(ObjectInputStream.java:2208)
        at java.io.ObjectInputStream.readOrdinaryObject(ObjectInputStream.java:2066)
        at java.io.ObjectInputStream.readObject0(ObjectInputStream.java:1570)
        at java.io.ObjectInputStream.defaultReadFields(ObjectInputStream.java:2284)
        at java.io.ObjectInputStream.readSerialData(ObjectInputStream.java:2208)
        at java.io.ObjectInputStream.readOrdinaryObject(ObjectInputStream.java:2066)
        at java.io.ObjectInputStream.readObject0(ObjectInputStream.java:1570)
        at java.io.ObjectInputStream.defaultReadFields(ObjectInputStream.java:2284)
        at java.io.ObjectInputStream.readSerialData(ObjectInputStream.java:2208)
        at java.io.ObjectInputStream.readOrdinaryObject(ObjectInputStream.java:2066)
        at java.io.ObjectInputStream.readObject0(ObjectInputStream.java:1570)
        at java.io.ObjectInputStream.defaultReadFields(ObjectInputStream.java:2284)
        at java.io.ObjectInputStream.readSerialData(ObjectInputStream.java:2208)
        at java.io.ObjectInputStream.readOrdinaryObject(ObjectInputStream.java:2066)
        at java.io.ObjectInputStream.readObject0(ObjectInputStream.java:1570)
        at java.io.ObjectInputStream.readObject(ObjectInputStream.java:430)
        at org.apache.spark.serializer.JavaDeserializationStream.readObject(JavaSerializer.scala:75)
        at org.apache.spark.serializer.JavaSerializerInstance.deserialize(JavaSerializer.scala:114)
        at org.apache.spark.scheduler.ResultTask.runTask(ResultTask.scala:80)
        at org.apache.spark.scheduler.Task.run(Task.scala:109)
        at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:345)
        at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)
        at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)
        at java.lang.Thread.run(Thread.java:748)
    18/08/06 15:18:41 INFO TaskSetManager: Lost task 1.0 in stage 0.0 (TID 1) on 192.168.0.107, executor 0: java.lang.ClassCastException (cannot assign instance of java.lang.invoke.SerializedLambda to field org.apache.spark.api.java.JavaRDD$$anonfun$filter$1.f$1 of type org.apache.spark.api.java.function.Function in instance of org.apache.spark.api.java.JavaRDD$$anonfun$filter$1) [duplicate 1]
    18/08/06 15:18:41 INFO TaskSetManager: Starting task 1.1 in stage 0.0 (TID 2, 192.168.0.107, executor 0, partition 1, PROCESS_LOCAL, 7898 bytes)
    18/08/06 15:18:41 INFO TaskSetManager: Starting task 0.1 in stage 0.0 (TID 3, 192.168.0.107, executor 0, partition 0, PROCESS_LOCAL, 7898 bytes)
    18/08/06 15:18:41 INFO TaskSetManager: Lost task 1.1 in stage 0.0 (TID 2) on 192.168.0.107, executor 0: java.lang.ClassCastException (cannot assign instance of java.lang.invoke.SerializedLambda to field org.apache.spark.api.java.JavaRDD$$anonfun$filter$1.f$1 of type org.apache.spark.api.java.function.Function in instance of org.apache.spark.api.java.JavaRDD$$anonfun$filter$1) [duplicate 2]
    18/08/06 15:18:41 INFO TaskSetManager: Starting task 1.2 in stage 0.0 (TID 4, 192.168.0.107, executor 0, partition 1, PROCESS_LOCAL, 7898 bytes)
    18/08/06 15:18:41 INFO TaskSetManager: Lost task 0.1 in stage 0.0 (TID 3) on 192.168.0.107, executor 0: java.lang.ClassCastException (cannot assign instance of java.lang.invoke.SerializedLambda to field org.apache.spark.api.java.JavaRDD$$anonfun$filter$1.f$1 of type org.apache.spark.api.java.function.Function in instance of org.apache.spark.api.java.JavaRDD$$anonfun$filter$1) [duplicate 3]
    18/08/06 15:18:41 INFO TaskSetManager: Starting task 0.2 in stage 0.0 (TID 5, 192.168.0.107, executor 0, partition 0, PROCESS_LOCAL, 7898 bytes)
    18/08/06 15:18:41 INFO TaskSetManager: Lost task 0.2 in stage 0.0 (TID 5) on 192.168.0.107, executor 0: java.lang.ClassCastException (cannot assign instance of java.lang.invoke.SerializedLambda to field org.apache.spark.api.java.JavaRDD$$anonfun$filter$1.f$1 of type org.apache.spark.api.java.function.Function in instance of org.apache.spark.api.java.JavaRDD$$anonfun$filter$1) [duplicate 4]
    18/08/06 15:18:41 INFO TaskSetManager: Starting task 0.3 in stage 0.0 (TID 6, 192.168.0.107, executor 0, partition 0, PROCESS_LOCAL, 7898 bytes)
    18/08/06 15:18:41 INFO TaskSetManager: Lost task 1.2 in stage 0.0 (TID 4) on 192.168.0.107, executor 0: java.lang.ClassCastException (cannot assign instance of java.lang.invoke.SerializedLambda to field org.apache.spark.api.java.JavaRDD$$anonfun$filter$1.f$1 of type org.apache.spark.api.java.function.Function in instance of org.apache.spark.api.java.JavaRDD$$anonfun$filter$1) [duplicate 5]
    18/08/06 15:18:41 INFO TaskSetManager: Starting task 1.3 in stage 0.0 (TID 7, 192.168.0.107, executor 0, partition 1, PROCESS_LOCAL, 7898 bytes)
    18/08/06 15:18:41 INFO TaskSetManager: Lost task 0.3 in stage 0.0 (TID 6) on 192.168.0.107, executor 0: java.lang.ClassCastException (cannot assign instance of java.lang.invoke.SerializedLambda to field org.apache.spark.api.java.JavaRDD$$anonfun$filter$1.f$1 of type org.apache.spark.api.java.function.Function in instance of org.apache.spark.api.java.JavaRDD$$anonfun$filter$1) [duplicate 6]
    18/08/06 15:18:41 ERROR TaskSetManager: Task 0 in stage 0.0 failed 4 times; aborting job
    18/08/06 15:18:41 INFO TaskSetManager: Lost task 1.3 in stage 0.0 (TID 7) on 192.168.0.107, executor 0: java.lang.ClassCastException (cannot assign instance of java.lang.invoke.SerializedLambda to field org.apache.spark.api.java.JavaRDD$$anonfun$filter$1.f$1 of type org.apache.spark.api.java.function.Function in instance of org.apache.spark.api.java.JavaRDD$$anonfun$filter$1) [duplicate 7]
    18/08/06 15:18:41 INFO TaskSchedulerImpl: Removed TaskSet 0.0, whose tasks have all completed, from pool
    18/08/06 15:18:41 INFO TaskSchedulerImpl: Cancelling stage 0
    18/08/06 15:18:41 INFO DAGScheduler: ResultStage 0 (count at SimpleApp.java:19) failed in 1.113 s due to Job aborted due to stage failure: Task 0 in stage 0.0 failed 4 times, most recent failure: Lost task 0.3 in stage 0.0 (TID 6, 192.168.0.107, executor 0): java.lang.ClassCastException: cannot assign instance of java.lang.invoke.SerializedLambda to field org.apache.spark.api.java.JavaRDD$$anonfun$filter$1.f$1 of type org.apache.spark.api.java.function.Function in instance of org.apache.spark.api.java.JavaRDD$$anonfun$filter$1
        at java.io.ObjectStreamClass$FieldReflector.setObjFieldValues(ObjectStreamClass.java:2233)
        at java.io.ObjectStreamClass.setObjFieldValues(ObjectStreamClass.java:1405)
        at java.io.ObjectInputStream.defaultReadFields(ObjectInputStream.java:2290)
        at java.io.ObjectInputStream.readSerialData(ObjectInputStream.java:2208)
        at java.io.ObjectInputStream.readOrdinaryObject(ObjectInputStream.java:2066)
        at java.io.ObjectInputStream.readObject0(ObjectInputStream.java:1570)
        at java.io.ObjectInputStream.defaultReadFields(ObjectInputStream.java:2284)
        at java.io.ObjectInputStream.readSerialData(ObjectInputStream.java:2208)
        at java.io.ObjectInputStream.readOrdinaryObject(ObjectInputStream.java:2066)
        at java.io.ObjectInputStream.readObject0(ObjectInputStream.java:1570)
        at java.io.ObjectInputStream.defaultReadFields(ObjectInputStream.java:2284)
        at java.io.ObjectInputStream.readSerialData(ObjectInputStream.java:2208)
        at java.io.ObjectInputStream.readOrdinaryObject(ObjectInputStream.java:2066)
        at java.io.ObjectInputStream.readObject0(ObjectInputStream.java:1570)
        at java.io.ObjectInputStream.defaultReadFields(ObjectInputStream.java:2284)
        at java.io.ObjectInputStream.readSerialData(ObjectInputStream.java:2208)
        at java.io.ObjectInputStream.readOrdinaryObject(ObjectInputStream.java:2066)
        at java.io.ObjectInputStream.readObject0(ObjectInputStream.java:1570)
        at java.io.ObjectInputStream.readObject(ObjectInputStream.java:430)
        at org.apache.spark.serializer.JavaDeserializationStream.readObject(JavaSerializer.scala:75)
        at org.apache.spark.serializer.JavaSerializerInstance.deserialize(JavaSerializer.scala:114)
        at org.apache.spark.scheduler.ResultTask.runTask(ResultTask.scala:80)
        at org.apache.spark.scheduler.Task.run(Task.scala:109)
        at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:345)
        at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)
        at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)
        at java.lang.Thread.run(Thread.java:748)
    Driver stacktrace:
    18/08/06 15:18:41 INFO DAGScheduler: Job 0 failed: count at SimpleApp.java:19, took 1.138497 s
    Exception in thread "main" org.apache.spark.SparkException: Job aborted due to stage failure: Task 0 in stage 0.0 failed 4 times, most recent failure: Lost task 0.3 in stage 0.0 (TID 6, 192.168.0.107, executor 0): java.lang.ClassCastException: cannot assign instance of java.lang.invoke.SerializedLambda to field org.apache.spark.api.java.JavaRDD$$anonfun$filter$1.f$1 of type org.apache.spark.api.java.function.Function in instance of org.apache.spark.api.java.JavaRDD$$anonfun$filter$1
        at java.io.ObjectStreamClass$FieldReflector.setObjFieldValues(ObjectStreamClass.java:2233)
        at java.io.ObjectStreamClass.setObjFieldValues(ObjectStreamClass.java:1405)
        at java.io.ObjectInputStream.defaultReadFields(ObjectInputStream.java:2290)
        at java.io.ObjectInputStream.readSerialData(ObjectInputStream.java:2208)
        at java.io.ObjectInputStream.readOrdinaryObject(ObjectInputStream.java:2066)
        at java.io.ObjectInputStream.readObject0(ObjectInputStream.java:1570)
        at java.io.ObjectInputStream.defaultReadFields(ObjectInputStream.java:2284)
        at java.io.ObjectInputStream.readSerialData(ObjectInputStream.java:2208)
        at java.io.ObjectInputStream.readOrdinaryObject(ObjectInputStream.java:2066)
        at java.io.ObjectInputStream.readObject0(ObjectInputStream.java:1570)
        at java.io.ObjectInputStream.defaultReadFields(ObjectInputStream.java:2284)
        at java.io.ObjectInputStream.readSerialData(ObjectInputStream.java:2208)
        at java.io.ObjectInputStream.readOrdinaryObject(ObjectInputStream.java:2066)
        at java.io.ObjectInputStream.readObject0(ObjectInputStream.java:1570)
        at java.io.ObjectInputStream.defaultReadFields(ObjectInputStream.java:2284)
        at java.io.ObjectInputStream.readSerialData(ObjectInputStream.java:2208)
        at java.io.ObjectInputStream.readOrdinaryObject(ObjectInputStream.java:2066)
        at java.io.ObjectInputStream.readObject0(ObjectInputStream.java:1570)
        at java.io.ObjectInputStream.readObject(ObjectInputStream.java:430)
        at org.apache.spark.serializer.JavaDeserializationStream.readObject(JavaSerializer.scala:75)
        at org.apache.spark.serializer.JavaSerializerInstance.deserialize(JavaSerializer.scala:114)
        at org.apache.spark.scheduler.ResultTask.runTask(ResultTask.scala:80)
        at org.apache.spark.scheduler.Task.run(Task.scala:109)
        at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:345)
        at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)
        at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)
        at java.lang.Thread.run(Thread.java:748)
    Driver stacktrace:
        at org.apache.spark.scheduler.DAGScheduler.org$apache$spark$scheduler$DAGScheduler$$failJobAndIndependentStages(DAGScheduler.scala:1602)
        at org.apache.spark.scheduler.DAGScheduler$$anonfun$abortStage$1.apply(DAGScheduler.scala:1590)
        at org.apache.spark.scheduler.DAGScheduler$$anonfun$abortStage$1.apply(DAGScheduler.scala:1589)
        at scala.collection.mutable.ResizableArray$class.foreach(ResizableArray.scala:59)
        at scala.collection.mutable.ArrayBuffer.foreach(ArrayBuffer.scala:48)
        at org.apache.spark.scheduler.DAGScheduler.abortStage(DAGScheduler.scala:1589)
        at org.apache.spark.scheduler.DAGScheduler$$anonfun$handleTaskSetFailed$1.apply(DAGScheduler.scala:831)
        at org.apache.spark.scheduler.DAGScheduler$$anonfun$handleTaskSetFailed$1.apply(DAGScheduler.scala:831)
        at scala.Option.foreach(Option.scala:257)
        at org.apache.spark.scheduler.DAGScheduler.handleTaskSetFailed(DAGScheduler.scala:831)
        at org.apache.spark.scheduler.DAGSchedulerEventProcessLoop.doOnReceive(DAGScheduler.scala:1823)
        at org.apache.spark.scheduler.DAGSchedulerEventProcessLoop.onReceive(DAGScheduler.scala:1772)
        at org.apache.spark.scheduler.DAGSchedulerEventProcessLoop.onReceive(DAGScheduler.scala:1761)
        at org.apache.spark.util.EventLoop$$anon$1.run(EventLoop.scala:48)
        at org.apache.spark.scheduler.DAGScheduler.runJob(DAGScheduler.scala:642)
        at org.apache.spark.SparkContext.runJob(SparkContext.scala:2034)
        at org.apache.spark.SparkContext.runJob(SparkContext.scala:2055)
        at org.apache.spark.SparkContext.runJob(SparkContext.scala:2074)
        at org.apache.spark.SparkContext.runJob(SparkContext.scala:2099)
        at org.apache.spark.rdd.RDD.count(RDD.scala:1162)
        at org.apache.spark.api.java.JavaRDDLike$class.count(JavaRDDLike.scala:455)
        at org.apache.spark.api.java.AbstractJavaRDDLike.count(JavaRDDLike.scala:45)
        at com.dounine.spark.learn.SimpleApp.main(SimpleApp.java:19)
    Caused by: java.lang.ClassCastException: cannot assign instance of java.lang.invoke.SerializedLambda to field org.apache.spark.api.java.JavaRDD$$anonfun$filter$1.f$1 of type org.apache.spark.api.java.function.Function in instance of org.apache.spark.api.java.JavaRDD$$anonfun$filter$1
        at java.io.ObjectStreamClass$FieldReflector.setObjFieldValues(ObjectStreamClass.java:2233)
        at java.io.ObjectStreamClass.setObjFieldValues(ObjectStreamClass.java:1405)
        at java.io.ObjectInputStream.defaultReadFields(ObjectInputStream.java:2290)
        at java.io.ObjectInputStream.readSerialData(ObjectInputStream.java:2208)
        at java.io.ObjectInputStream.readOrdinaryObject(ObjectInputStream.java:2066)
        at java.io.ObjectInputStream.readObject0(ObjectInputStream.java:1570)
        at java.io.ObjectInputStream.defaultReadFields(ObjectInputStream.java:2284)
        at java.io.ObjectInputStream.readSerialData(ObjectInputStream.java:2208)
        at java.io.ObjectInputStream.readOrdinaryObject(ObjectInputStream.java:2066)
        at java.io.ObjectInputStream.readObject0(ObjectInputStream.java:1570)
        at java.io.ObjectInputStream.defaultReadFields(ObjectInputStream.java:2284)
        at java.io.ObjectInputStream.readSerialData(ObjectInputStream.java:2208)
        at java.io.ObjectInputStream.readOrdinaryObject(ObjectInputStream.java:2066)
        at java.io.ObjectInputStream.readObject0(ObjectInputStream.java:1570)
        at java.io.ObjectInputStream.defaultReadFields(ObjectInputStream.java:2284)
        at java.io.ObjectInputStream.readSerialData(ObjectInputStream.java:2208)
        at java.io.ObjectInputStream.readOrdinaryObject(ObjectInputStream.java:2066)
        at java.io.ObjectInputStream.readObject0(ObjectInputStream.java:1570)
        at java.io.ObjectInputStream.readObject(ObjectInputStream.java:430)
        at org.apache.spark.serializer.JavaDeserializationStream.readObject(JavaSerializer.scala:75)
        at org.apache.spark.serializer.JavaSerializerInstance.deserialize(JavaSerializer.scala:114)
        at org.apache.spark.scheduler.ResultTask.runTask(ResultTask.scala:80)
        at org.apache.spark.scheduler.Task.run(Task.scala:109)
        at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:345)
        at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)
        at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)
        at java.lang.Thread.run(Thread.java:748)
    18/08/06 15:18:41 INFO SparkContext: Invoking stop() from shutdown hook
    18/08/06 15:18:41 INFO SparkUI: Stopped Spark web UI at http://lake.dounine.com:4040
    18/08/06 15:18:41 INFO StandaloneSchedulerBackend: Shutting down all executors
    18/08/06 15:18:41 INFO CoarseGrainedSchedulerBackend$DriverEndpoint: Asking each executor to shut down
    18/08/06 15:18:41 INFO MapOutputTrackerMasterEndpoint: MapOutputTrackerMasterEndpoint stopped!
    18/08/06 15:18:41 INFO MemoryStore: MemoryStore cleared
    18/08/06 15:18:41 INFO BlockManager: BlockManager stopped
    18/08/06 15:18:41 INFO BlockManagerMaster: BlockManagerMaster stopped
    18/08/06 15:18:41 INFO OutputCommitCoordinator$OutputCommitCoordinatorEndpoint: OutputCommitCoordinator stopped!
    18/08/06 15:18:41 INFO SparkContext: Successfully stopped SparkContext
    18/08/06 15:18:41 INFO ShutdownHookManager: Shutdown hook called
    18/08/06 15:18:41 INFO ShutdownHookManager: Deleting directory /tmp/spark-cf16df6e-fd04-4d17-8b6a-a6252793d0d5

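The cause is that the application JAR was not distributed to the Workers. Without it, the executor cannot load the class that defines the lambda, so the deserialized java.lang.invoke.SerializedLambda is never turned back into a Function.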
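To make the cause more concrete, here is a small JDK-only sketch (no Spark involved) of what Java serialization writes for a lambda. `LambdaInspector`, `SerializablePredicate`, and `containsA` are names invented for this illustration. The sketch relies on the private `writeReplace()` method that the JDK generates for serializable lambdas, and reads it through reflection.

```java
import java.io.Serializable;
import java.lang.invoke.SerializedLambda;
import java.lang.reflect.Method;
import java.util.function.Predicate;

class LambdaInspector {
    /** Hypothetical stand-in for a Spark function interface: a Predicate that is Serializable. */
    interface SerializablePredicate<T> extends Predicate<T>, Serializable {}

    /** A lambda defined inside this class, so this class is its "capturing class". */
    static SerializablePredicate<String> containsA() {
        return s -> s.contains("a");
    }

    /** Returns the SerializedLambda that Java serialization would write in place of the lambda. */
    static SerializedLambda toSerializedForm(Serializable lambda) {
        try {
            // Serializable lambdas get a generated private writeReplace() method.
            Method writeReplace = lambda.getClass().getDeclaredMethod("writeReplace");
            writeReplace.setAccessible(true);
            return (SerializedLambda) writeReplace.invoke(lambda);
        } catch (ReflectiveOperationException e) {
            throw new IllegalStateException("not a serializable lambda", e);
        }
    }

    public static void main(String[] args) {
        SerializedLambda form = toSerializedForm(containsA());
        // The receiving JVM must be able to load this class to rebuild the lambda.
        System.out.println("capturing class:      " + form.getCapturingClass());
        System.out.println("functional interface: " + form.getFunctionalInterfaceClass());
        System.out.println("implementation:       " + form.getImplMethodName());
    }
}
```

The capturing class printed here is the class whose bytecode has to be present on the receiving side. In the Spark example above that class is SimpleApp, which lives only in the application JAR.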

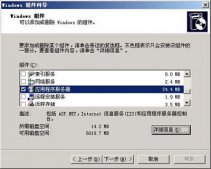

Solution 1

Add the path of the application JAR

    SparkConf sparkConf = new SparkConf()
            .setMaster("spark://lake.dounine.com:7077")
            .setJars(new String[]{"/soft/dounine/github/spark-learn/build/libs/spark-learn-1.0-SNAPSHOT.jar"})
            .setAppName("Demo");
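A hard-coded JAR path only helps if the JAR has actually been built. As a rough sketch, a small JDK-only helper could filter the candidate paths before they are handed to setJars. `JarPaths` and `existingJars` are hypothetical names, not part of Spark.

```java
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.List;

class JarPaths {
    /** Keeps only the candidates that end in .jar and exist as regular files. */
    static String[] existingJars(String... candidates) {
        List<String> found = new ArrayList<>();
        for (String candidate : candidates) {
            Path path = Paths.get(candidate);
            if (candidate.endsWith(".jar") && Files.isRegularFile(path)) {
                found.add(path.toAbsolutePath().toString());
            }
        }
        return found.toArray(new String[0]);
    }

    public static void main(String[] args) {
        String[] jars = existingJars(
                "/soft/dounine/github/spark-learn/build/libs/spark-learn-1.0-SNAPSHOT.jar");
        if (jars.length == 0) {
            System.out.println("No application JAR found; build the project first.");
        } else {
            System.out.println("Would pass to setJars: " + String.join(", ", jars));
        }
    }
}
```

The returned array has the same type as the argument used in the setJars call above, so it could be passed in directly. An empty result is a hint that the build step was skipped.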

Solution 2

Use local development mode

    SparkConf sparkConf = new SparkConf()
            .setMaster("local")
            .setAppName("Demo");
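Local mode presumably avoids the problem because the driver and the tasks run in one JVM with one classpath, so the class that defines the lambda is always loadable. The JDK-only sketch below imitates that situation: it serializes a lambda and reads it back in the same JVM. `LambdaRoundTrip` and its members are invented for this illustration and have nothing to do with Spark's own serializer classes.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.ObjectInputStream;
import java.io.ObjectOutputStream;
import java.io.Serializable;
import java.io.UncheckedIOException;
import java.util.function.Predicate;

class LambdaRoundTrip {
    /** Hypothetical stand-in for a Spark function interface. */
    interface SerializablePredicate<T> extends Predicate<T>, Serializable {}

    static SerializablePredicate<String> containsA() {
        return s -> s.contains("a");
    }

    /** Plain Java serialization to a byte array. */
    static byte[] serialize(Object value) {
        ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        try (ObjectOutputStream out = new ObjectOutputStream(bytes)) {
            out.writeObject(value);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return bytes.toByteArray();
    }

    /** Reads the object back; succeeds only if the defining class can be loaded. */
    static Object deserialize(byte[] data) {
        try (ObjectInputStream in = new ObjectInputStream(new ByteArrayInputStream(data))) {
            return in.readObject();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        } catch (ClassNotFoundException e) {
            throw new IllegalStateException("defining class is not on the classpath", e);
        }
    }

    public static void main(String[] args) {
        @SuppressWarnings("unchecked")
        Predicate<String> copy = (Predicate<String>) deserialize(serialize(containsA()));
        System.out.println("copy matches \"spark\": " + copy.test("spark"));
    }
}
```

On a cluster the deserialize step happens in a different JVM on the Worker, which is where the missing JAR shows up.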

Fixing the EOFException (Kryo) and SerializedLambda errors when running Spark

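Fixing the Kryo EOFException

Remove the older Kryo JAR from the dependency libraries of the project deployed to the Spark worker machines, as follows:

In the runtime environment, delete kryo-2.21.jar and keep kryo-shaded-3.0.3.jar. After that the job runs fine.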

On inspection, both kryo-shaded-3.0.3.jar and geowave-tools-0.9.8-apache.jar contain the class com.esotericsoftware.kryo.io.UnsafeOutput (UnsafeOutput.class, size 7066), whereas kryo-2.21.jar does not contain this class.
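This kind of check can also be scripted instead of opening each JAR by hand. The sketch below uses only `java.util.jar.JarFile` from the JDK. `JarClassFinder` and `containsClass` are hypothetical names, and the JAR paths are expected as command-line arguments.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.util.jar.JarFile;

class JarClassFinder {
    /** Returns true if the JAR at jarPath has an entry for the given fully qualified class name. */
    static boolean containsClass(String jarPath, String className) {
        // com.example.Foo is stored in a JAR as com/example/Foo.class
        String entryName = className.replace('.', '/') + ".class";
        try (JarFile jar = new JarFile(jarPath)) {
            return jar.getJarEntry(entryName) != null;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        String className = "com.esotericsoftware.kryo.io.UnsafeOutput";
        // Pass the JAR paths to compare, e.g. the two Kryo JARs mentioned above.
        for (String jarPath : args) {
            System.out.println(jarPath + " contains " + className + ": "
                    + containsClass(jarPath, className));
        }
    }
}
```

Running it against every JAR in the worker's dependency directory shows which of them ship the class and which do not.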

The specific error is shown below. It appears in particular when calling javaRDD.count() and javaRDD.mapToPair():

    java.io.EOFException
        at org.apache.spark.serializer.KryoDeserializationStream.readObject(KryoSerializer.scala:283)
        at org.apache.spark.broadcast.TorrentBroadcast$$anonfun$8.apply(TorrentBroadcast.scala:308)
        at org.apache.spark.util.Utils$.tryWithSafeFinally(Utils.scala:1380)
        at org.apache.spark.broadcast.TorrentBroadcast$.unBlockifyObject(TorrentBroadcast.scala:309)
        at org.apache.spark.broadcast.TorrentBroadcast$$anonfun$readBroadcastBlock$1$$anonfun$apply$2.apply(TorrentBroadcast.scala:235)
        at scala.Option.getOrElse(Option.scala:121)
        at org.apache.spark.broadcast.TorrentBroadcast$$anonfun$readBroadcastBlock$1.apply(TorrentBroadcast.scala:211)
        at org.apache.spark.util.Utils$.tryOrIOException(Utils.scala:1346)
        at org.apache.spark.broadcast.TorrentBroadcast.readBroadcastBlock(TorrentBroadcast.scala:207)
        at org.apache.spark.broadcast.TorrentBroadcast._value$lzycompute(TorrentBroadcast.scala:66)
        at org.apache.spark.broadcast.TorrentBroadcast._value(TorrentBroadcast.scala:66)
        at org.apache.spark.broadcast.TorrentBroadcast.getValue(TorrentBroadcast.scala:96)
        at org.apache.spark.broadcast.Broadcast.value(Broadcast.scala:70)
        at org.apache.spark.scheduler.ResultTask.runTask(ResultTask.scala:81)
        at org.apache.spark.scheduler.Task.run(Task.scala:109)
        at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:345)
        at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)
        at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)
        at java.lang.Thread.run(Thread.java:748)

Fixing the "cannot assign instance of SerializedLambda" error

cannot assign instance of java.lang.invoke.SerializedLambda to field

Add one line to the code:

    conf.setJars(JavaSparkContext.jarOfClass(this.getClass()));

After that the job runs without problems.
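As far as I understand it, jarOfClass can only report a JAR when the class was actually loaded from one, which is not the case when the program is started from an IDE's compiled-classes directory. The JDK-only sketch below shows one way to check where a class was loaded from. `CodeSourceLocator`, `locationOf`, and `isJar` are hypothetical names and are not part of Spark.

```java
import java.security.CodeSource;
import java.util.Optional;

class CodeSourceLocator {
    /** Returns the path a class was loaded from, or empty for JDK classes with no code source. */
    static Optional<String> locationOf(Class<?> cls) {
        CodeSource source = cls.getProtectionDomain().getCodeSource();
        if (source == null || source.getLocation() == null) {
            return Optional.empty();
        }
        // Note: getPath() keeps URL encoding; good enough for a quick diagnostic.
        return Optional.of(source.getLocation().getPath());
    }

    /** A location is only useful for shipping to Workers if it is a JAR file. */
    static boolean isJar(String location) {
        return location.endsWith(".jar");
    }

    public static void main(String[] args) {
        Optional<String> location = locationOf(CodeSourceLocator.class);
        if (!location.isPresent()) {
            System.out.println("No code source available for this class.");
        } else if (isJar(location.get())) {
            System.out.println("Loaded from JAR: " + location.get());
        } else {
            System.out.println("Loaded from a directory, not a JAR: " + location.get());
        }
    }
}
```

If the output reports a directory, building the JAR first and passing its path explicitly is probably the safer route.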

The specific error is as follows:

    java.lang.ClassCastException: cannot assign instance of java.lang.invoke.SerializedLambda to field org.apache.spark.api.java.JavaPairRDD$$anonfun$pairFunToScalaFun$1.x$334 of type org.apache.spark.api.java.function.PairFunction in instance of org.apache.spark.api.java.JavaPairRDD$$anonfun$pairFunToScalaFun$1
        at java.io.ObjectStreamClass$FieldReflector.setObjFieldValues(ObjectStreamClass.java:2233)
        at java.io.ObjectStreamClass.setObjFieldValues(ObjectStreamClass.java:1405)
        at java.io.ObjectInputStream.defaultReadFields(ObjectInputStream.java:2291)
        at java.io.ObjectInputStream.readSerialData(ObjectInputStream.java:2209)
        at java.io.ObjectInputStream.readOrdinaryObject(ObjectInputStream.java:2067)
        at java.io.ObjectInputStream.readObject0(ObjectInputStream.java:1571)
        at java.io.ObjectInputStream.defaultReadFields(ObjectInputStream.java:2285)
        at java.io.ObjectInputStream.readSerialData(ObjectInputStream.java:2209)
        at java.io.ObjectInputStream.readOrdinaryObject(ObjectInputStream.java:2067)
        at java.io.ObjectInputStream.readObject0(ObjectInputStream.java:1571)
        at java.io.ObjectInputStream.defaultReadFields(ObjectInputStream.java:2285)
        at java.io.ObjectInputStream.readSerialData(ObjectInputStream.java:2209)
        at java.io.ObjectInputStream.readOrdinaryObject(ObjectInputStream.java:2067)
        at java.io.ObjectInputStream.readObject0(ObjectInputStream.java:1571)
        at java.io.ObjectInputStream.defaultReadFields(ObjectInputStream.java:2285)
        at java.io.ObjectInputStream.readSerialData(ObjectInputStream.java:2209)
        at java.io.ObjectInputStream.readOrdinaryObject(ObjectInputStream.java:2067)
        at java.io.ObjectInputStream.readObject0(ObjectInputStream.java:1571)
        at java.io.ObjectInputStream.readObject(ObjectInputStream.java:431)
        at org.apache.spark.serializer.JavaDeserializationStream.readObject(JavaSerializer.scala:75)
        at org.apache.spark.serializer.JavaSerializerInstance.deserialize(JavaSerializer.scala:114)
        at org.apache.spark.scheduler.ShuffleMapTask.runTask(ShuffleMapTask.scala:85)
        at org.apache.spark.scheduler.ShuffleMapTask.runTask(ShuffleMapTask.scala:53)
        at org.apache.spark.scheduler.Task.run(Task.scala:109)
        at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:345)
        at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)
        at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)
        ... 1 more

The above is based on personal experience; I hope it serves as a useful reference.

Original link: https://blog.csdn.net/dounine/article/details/81637781