Voice Capture

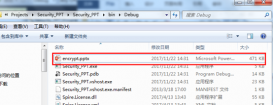

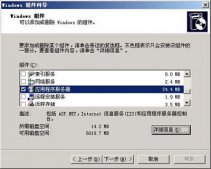

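To send a voice message, we first have to capture the audio. There are several ways to do this; one is to record with DirectX's DirectSound. For simplicity, I used the open-source library NAudio to record audio. Add a reference to NAudio.dll in the project.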

```csharp
using System;
using System.IO;
using System.Linq;
using NAudio.CoreAudioApi;
using NAudio.Wave;

public partial class Form1
{
    //------------------ Recording ------------------
    private IWaveIn waveIn;
    private WaveFileWriter writer;

    // Lists the active WASAPI capture devices in comboBox1.
    // Note: the list is only displayed; CreateWaveInDevice() records from WaveIn device 0 (its DeviceNumber default).
    private void LoadWasapiDevicesCombo()
    {
        var deviceEnum = new MMDeviceEnumerator();
        var devices = deviceEnum.EnumerateAudioEndPoints(DataFlow.Capture, DeviceState.Active).ToList();
        comboBox1.DataSource = devices;
        comboBox1.DisplayMember = "FriendlyName";
    }

    private void CreateWaveInDevice()
    {
        waveIn = new WaveIn();
        waveIn.WaveFormat = new WaveFormat(8000, 1); // 8 kHz, mono, 16-bit PCM
        waveIn.DataAvailable += OnDataAvailable;
        waveIn.RecordingStopped += OnRecordingStopped;
    }

    void OnDataAvailable(object sender, WaveInEventArgs e)
    {
        if (InvokeRequired)
        {
            // Marshal to the UI thread before touching the writer and the controls.
            BeginInvoke(new EventHandler<WaveInEventArgs>(OnDataAvailable), sender, e);
            return;
        }
        if (writer == null) return; // the file may already have been finalized
        writer.Write(e.Buffer, 0, e.BytesRecorded);
        int secondsRecorded = (int)(writer.Length / writer.WaveFormat.AverageBytesPerSecond);
        if (secondsRecorded >= 10) // maximum 10 s
            StopRecord();
        else
            l_sound.Text = secondsRecorded + " s";
    }

    void OnRecordingStopped(object sender, StoppedEventArgs e)
    {
        if (InvokeRequired)
        {
            BeginInvoke(new EventHandler<StoppedEventArgs>(OnRecordingStopped), sender, e);
            return;
        }
        FinalizeWaveFile(); // disposing the writer completes the WAV header
    }

    void StopRecord()
    {
        // AllChangeBtn is a helper defined elsewhere in the project (not shown).
        AllChangeBtn(btn_luyin, true);
        AllChangeBtn(btn_stop, false);
        AllChangeBtn(btn_sendsound, true);
        AllChangeBtn(btn_play, true);
        waveIn?.StopRecording(); // RecordingStopped fires afterwards and finalizes the file
    }

    private void Cleanup()
    {
        if (waveIn != null)
        {
            waveIn.Dispose();
            waveIn = null;
        }
        FinalizeWaveFile();
    }

    private void FinalizeWaveFile()
    {
        writer?.Dispose();
        writer = null;
    }

    // Start recording
    private void btn_luyin_Click(object sender, EventArgs e)
    {
        btn_stop.Enabled = true;
        btn_luyin.Enabled = false;
        if (waveIn == null)
            CreateWaveInDevice();
        if (File.Exists(soundFile))
            File.Delete(soundFile);
        writer = new WaveFileWriter(soundFile, waveIn.WaveFormat);
        waveIn.StartRecording();
    }
}
```

The code above records audio and writes it to the file p2psound_a.wav, stopping automatically after 10 seconds.
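To put numbers on the 10-second cap: in NAudio, `new WaveFormat(8000, 1)` means 8 kHz, mono, 16-bit PCM, so `AverageBytesPerSecond` is 8000 × 1 × 2 = 16,000 bytes and a full recording holds about 160,000 bytes of audio. `WaveFileWriter` stores those samples behind a RIFF/WAVE header. The stdlib-only sketch below builds the canonical 44-byte PCM header by hand to show what such a header contains; `WavSketch`, `WrapPcm` and `SecondsRecorded` are hypothetical names, and NAudio's own header may differ in small details.

```csharp
using System;
using System.IO;
using System.Text;

// Hypothetical helper, not part of NAudio: wraps raw PCM samples in the canonical
// 44-byte RIFF/WAVE header so the layout of a PCM WAV file is visible.
public static class WavSketch
{
    public static byte[] WrapPcm(byte[] pcm, int sampleRate, short channels, short bitsPerSample)
    {
        short blockAlign = (short)(channels * bitsPerSample / 8); // bytes per sample frame
        int byteRate = sampleRate * blockAlign;                   // NAudio calls this AverageBytesPerSecond

        using var ms = new MemoryStream();
        using (var w = new BinaryWriter(ms, Encoding.ASCII, leaveOpen: true)) // BinaryWriter is little-endian
        {
            w.Write(Encoding.ASCII.GetBytes("RIFF"));
            w.Write(36 + pcm.Length);                  // RIFF chunk size = total file size - 8
            w.Write(Encoding.ASCII.GetBytes("WAVE"));
            w.Write(Encoding.ASCII.GetBytes("fmt "));
            w.Write(16);                               // fmt chunk size for plain PCM
            w.Write((short)1);                         // format tag 1 = PCM
            w.Write(channels);
            w.Write(sampleRate);
            w.Write(byteRate);
            w.Write(blockAlign);
            w.Write(bitsPerSample);
            w.Write(Encoding.ASCII.GetBytes("data"));
            w.Write(pcm.Length);                       // number of audio bytes that follow
            w.Write(pcm);
        }
        return ms.ToArray();
    }

    // Same arithmetic as the recording code: audio bytes written / bytes per second.
    public static int SecondsRecorded(long pcmBytes, int sampleRate, short channels, short bitsPerSample)
        => (int)(pcmBytes / (sampleRate * channels * bitsPerSample / 8));
}
```

For example, `WavSketch.SecondsRecorded(160000, 8000, 1, 16)` returns 10, the point at which the recording callback stops recording.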

Sending the Voice Message

Once the audio has been captured, we need to send it.

After recording, the user clicks the Send button. The relevant code is:

```csharp
using System;
using System.IO;
using System.Net;
using System.Windows.Forms;

public partial class Form1
{
    // Config, SeiClient, UdpThread, MsgTranslator, Msg, Commands and Consts come from NetFrame.
    MsgTranslator tran = null;

    public Form1()
    {
        InitializeComponent();
        LoadWasapiDevicesCombo(); // show the audio capture devices
        Config cfg = SeiClient.GetDefaultConfig();
        cfg.Port = 7777;
        UdpThread udp = new UdpThread(cfg);
        tran = new MsgTranslator(udp, cfg);
        tran.MessageReceived += tran_MessageReceived;
        tran.Debuged += new EventHandler<DebugEventArgs>(tran_Debuged);
    }

    private void btn_sendsound_Click(object sender, EventArgs e)
    {
        if (!IPAddress.TryParse(t_ip.Text, out IPAddress address))
        {
            MessageBox.Show("Please enter a valid IP address");
            return;
        }
        if (!int.TryParse(t_port.Text, out int port) || port < 1 || port > 65535)
        {
            MessageBox.Show("Please enter a valid port number");
            return;
        }
        if (!File.Exists(soundFile))
        {
            MessageBox.Show("Please record a voice message first");
            return;
        }
        string nick = t_nick.Text;
        string text = "Voice message";
        IPEndPoint remote = new IPEndPoint(address, port);
        Msg m = new Msg(remote, "zz", nick, Commands.SendMsg, text, "come from a");
        m.IsRequireReceive = true;
        m.ExtendMessageBytes = FileContent(soundFile); // the whole WAV file travels as the binary payload
        m.PackageNo = Msg.GetRandomNumber();
        m.Type = Consts.MESSAGE_BINARY;
        tran.Send(m);
    }

    // File.ReadAllBytes keeps reading until the whole file is in memory; a single
    // FileStream.Read call may return fewer bytes than requested.
    private byte[] FileContent(string fileName)
    {
        return File.ReadAllBytes(fileName);
    }
}
```

With this, the recorded audio file has been sent.
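One thing to keep in mind when the whole WAV file travels in a single message: at 16,000 bytes per second, a 10-second recording is roughly 160 KB, while a single IPv4 UDP datagram can carry at most 65,507 bytes of payload. The source does not show how `MsgTranslator` handles payloads of this size. If a transport has to split them itself, one common approach is to cut the bytes into numbered chunks and reassemble them on the receiver. The sketch below assumes a made-up 12-byte chunk header (package number, chunk index, chunk count) and a hypothetical `ChunkSketch` class; it is not NetFrame's wire format, and it does no retransmission, so a lost chunk simply leaves the message incomplete.

```csharp
#nullable enable
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical framing for illustration only, not the NetFrame/MsgTranslator format.
public static class ChunkSketch
{
    // Well under the 65,507-byte IPv4 UDP payload limit (and under a typical path MTU).
    public const int MaxChunk = 1024;

    public static List<byte[]> Split(byte[] payload, int packageNo)
    {
        int count = Math.Max(1, (payload.Length + MaxChunk - 1) / MaxChunk);
        var chunks = new List<byte[]>(count);
        for (int i = 0; i < count; i++)
        {
            int offset = i * MaxChunk;
            int len = Math.Min(MaxChunk, payload.Length - offset);
            var chunk = new byte[12 + len];
            BitConverter.GetBytes(packageNo).CopyTo(chunk, 0); // which message this chunk belongs to
            BitConverter.GetBytes(i).CopyTo(chunk, 4);         // chunk index
            BitConverter.GetBytes(count).CopyTo(chunk, 8);     // total number of chunks
            Array.Copy(payload, offset, chunk, 12, len);
            chunks.Add(chunk);
        }
        return chunks;
    }

    // Assumes all chunks share one package number. Accepts them in any order,
    // ignores duplicates, and returns null until every index 0..count-1 is present.
    public static byte[]? Join(IEnumerable<byte[]> received)
    {
        var byIndex = new SortedDictionary<int, byte[]>();
        int count = -1;
        foreach (var c in received)
        {
            count = BitConverter.ToInt32(c, 8);
            byIndex[BitConverter.ToInt32(c, 4)] = c.Skip(12).ToArray();
        }
        if (count < 0 || byIndex.Count != count) return null;
        return byIndex.Values.SelectMany(b => b).ToArray();
    }
}
```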

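Receiving and Playing the Voice Message

Receiving a voice message is no different from receiving a text message, except that the voice message is sent as binary data. So when it arrives we write it to a file, and once reception is complete we simply play that file.

The code below saves the received data to a file. This method is the handler for the event my NetFrame raises when a message arrives; NetFrame is described in the article mentioned earlier.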

```csharp
using System.IO;
// plus the NetFrame namespace for Msg, MessageEventArgs and Consts (not shown in the original)

public partial class Form1
{
    // Raised by NetFrame's MsgTranslator whenever a message arrives.
    void tran_MessageReceived(object sender, MessageEventArgs e)
    {
        Msg msg = e.Msg;
        if (msg.Type == Consts.MESSAGE_BINARY)
        {
            string m = msg.Type + "->" + msg.UserName + " sent a binary message!";
            AddServerMessage(m);
            // Creates the file, or overwrites an existing one, so no separate delete is needed.
            File.WriteAllBytes(reciveSoundFile, msg.ExtendMessageBytes);
            //Play_Sound(reciveSoundFile);
            ChangeBtn(true);
        }
        else
        {
            string m = msg.Type + "->" + msg.UserName + " says: " + msg.NormalMsg;
            AddServerMessage(m);
        }
    }
}
```
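Because the received bytes are written straight to disk and later handed to the player, it can help to check first that they really form a WAV file. The sketch below is a hypothetical helper (`WavCheck.TryReadWavInfo`, not part of NAudio or NetFrame) that walks the RIFF chunks, reads the sample rate and channel count from the `fmt ` chunk, and derives the clip length as data bytes ÷ byte rate. It walks the chunks instead of assuming fixed offsets, because writers may use a `fmt ` chunk longer than 16 bytes.

```csharp
using System;
using System.Text;

// Hypothetical helper: sanity-checks a received payload before it is saved and played.
public static class WavCheck
{
    public static bool TryReadWavInfo(byte[] data, out int sampleRate, out short channels, out double seconds)
    {
        sampleRate = 0; channels = 0; seconds = 0;
        if (data == null || data.Length < 12) return false;
        if (Encoding.ASCII.GetString(data, 0, 4) != "RIFF" ||
            Encoding.ASCII.GetString(data, 8, 4) != "WAVE") return false;

        int byteRate = 0;
        long dataLen = -1;
        long pos = 12; // first sub-chunk follows "RIFF", the RIFF size and "WAVE"
        while (pos + 8 <= data.Length)
        {
            string id = Encoding.ASCII.GetString(data, (int)pos, 4);
            long size = BitConverter.ToUInt32(data, (int)pos + 4);
            long body = pos + 8;
            if (id == "fmt " && size >= 16 && body + 16 <= data.Length)
            {
                channels = BitConverter.ToInt16(data, (int)body + 2);
                sampleRate = BitConverter.ToInt32(data, (int)body + 4);
                byteRate = BitConverter.ToInt32(data, (int)body + 8);
            }
            else if (id == "data")
            {
                // Tolerate a truncated payload by counting only the bytes actually present.
                dataLen = Math.Min(size, data.Length - body);
                break;
            }
            pos = body + size + (size & 1); // RIFF chunks are padded to an even length
        }
        if (byteRate <= 0 || dataLen < 0) return false;
        seconds = (double)dataLen / byteRate;
        return true;
    }
}
```

A payload that fails this check could be reported and dropped instead of being saved over the previous recording.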

Once the voice message has been received, we play it, again using the same library, NAudio.

```csharp
using System;
using System.Windows.Forms;
using NAudio.Wave;

public partial class Form1
{
    //-------- Playback ----------
    private IWavePlayer wavePlayer;
    private WaveStream reader;

    public void Play_Sound(string fileName)
    {
        // Release the previous player and reader before opening a new file. Unsubscribing first
        // keeps a late PlaybackStopped from the old player from stopping the new one.
        if (wavePlayer != null)
        {
            wavePlayer.PlaybackStopped -= WavePlayerOnPlaybackStopped;
            wavePlayer.Dispose();
            wavePlayer = null;
        }
        reader?.Dispose();

        reader = new MediaFoundationReader(fileName,
            new MediaFoundationReader.MediaFoundationReaderSettings { SingleReaderObject = true });
        wavePlayer = new WaveOut();
        wavePlayer.PlaybackStopped += WavePlayerOnPlaybackStopped;
        wavePlayer.Init(reader);
        wavePlayer.Play();
    }

    private void WavePlayerOnPlaybackStopped(object sender, StoppedEventArgs stoppedEventArgs)
    {
        if (stoppedEventArgs.Exception != null)
            MessageBox.Show(stoppedEventArgs.Exception.Message);
        wavePlayer?.Stop();
        btn_luyin.Enabled = true;
    }

    private void btn_play_Click(object sender, EventArgs e)
    {
        btn_luyin.Enabled = false;
        Play_Sound(soundFile);
    }
}
```

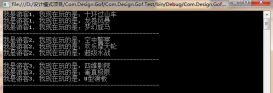

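The screenshot above shows the interface while sending and receiving a voice message.

Technical Summary

The main technologies used are UDP and NAudio's recording and playback features.

I hope this article gives you some ideas and helps you build your own P2P voice chat tool.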
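At the bottom of all this sits plain UDP. The sketch below shows a single datagram round trip on the loopback interface using only `System.Net.Sockets.UdpClient`; the `UdpLoopback` class is a hypothetical illustration, not the author's `UdpThread` code. Each `Send` call becomes one datagram, delivery and ordering are not guaranteed on a real network, and a payload above the 65,507-byte IPv4 limit makes `Send` throw a `SocketException`.

```csharp
using System;
using System.Net;
using System.Net.Sockets;

// Minimal sketch of one UDP round trip over loopback using only the standard library.
public static class UdpLoopback
{
    public static byte[] SendToSelf(byte[] payload)
    {
        // Port 0 lets the OS pick a free port for the receiving socket.
        using var receiver = new UdpClient(new IPEndPoint(IPAddress.Loopback, 0));
        receiver.Client.ReceiveTimeout = 2000; // ms; Receive throws SocketException if nothing arrives
        var target = (IPEndPoint)receiver.Client.LocalEndPoint;

        using var sender = new UdpClient();
        // One Send call produces one datagram; oversized payloads fail here.
        sender.Send(payload, payload.Length, target);

        var from = new IPEndPoint(IPAddress.Any, 0);
        return receiver.Receive(ref from); // 'from' is overwritten with the sender's address
    }
}
```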