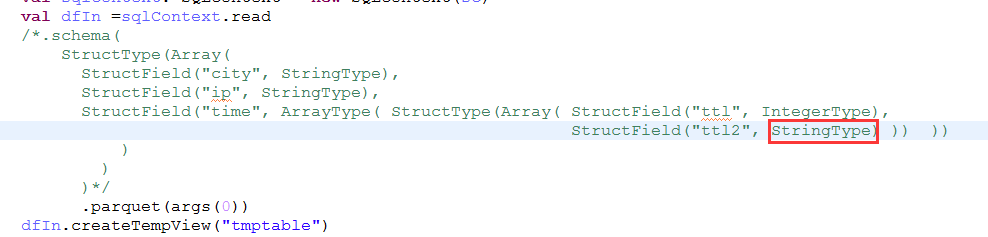

本文介紹了java 讀寫Parquet格式的數據,分享給大家,具體如下:

|

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

71

72

73

74

75

76

77

78

79

80

81

82

83

84

85

86

87

88

89

90

91

92

93

94

95

96

97

98

99

100

101

|

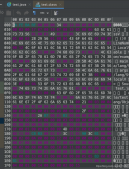

import java.io.BufferedReader;import java.io.File;import java.io.FileReader;import java.io.IOException;import java.util.Random;import org.apache.hadoop.conf.Configuration;import org.apache.hadoop.fs.Path;import org.apache.log4j.Logger;import org.apache.parquet.example.data.Group;import org.apache.parquet.example.data.GroupFactory;import org.apache.parquet.example.data.simple.SimpleGroupFactory;import org.apache.parquet.hadoop.ParquetReader;import org.apache.parquet.hadoop.ParquetReader.Builder;import org.apache.parquet.hadoop.ParquetWriter;import org.apache.parquet.hadoop.example.GroupReadSupport;import org.apache.parquet.hadoop.example.GroupWriteSupport;import org.apache.parquet.schema.MessageType;import org.apache.parquet.schema.MessageTypeParser;public class ReadParquet { static Logger logger=Logger.getLogger(ReadParquet.class); public static void main(String[] args) throws Exception { // parquetWriter("test\\parquet-out2","input.txt"); parquetReaderV2("test\\parquet-out2"); } static void parquetReaderV2(String inPath) throws Exception{ GroupReadSupport readSupport = new GroupReadSupport(); Builder<Group> reader= ParquetReader.builder(readSupport, new Path(inPath)); ParquetReader<Group> build=reader.build(); Group line=null; while((line=build.read())!=null){ Group time= line.getGroup("time", 0); //通過下標和字段名稱都可以獲取 /*System.out.println(line.getString(0, 0)+"\t"+ line.getString(1, 0)+"\t"+ time.getInteger(0, 0)+"\t"+ time.getString(1, 0)+"\t");*/ System.out.println(line.getString("city", 0)+"\t"+ line.getString("ip", 0)+"\t"+ time.getInteger("ttl", 0)+"\t"+ time.getString("ttl2", 0)+"\t"); //System.out.println(line.toString()); } System.out.println("讀取結束"); } //新版本中new ParquetReader()所有構造方法好像都棄用了,用上面的builder去構造對象 static void parquetReader(String inPath) throws Exception{ GroupReadSupport readSupport = new GroupReadSupport(); ParquetReader<Group> reader = new ParquetReader<Group>(new Path(inPath),readSupport); Group line=null; while((line=reader.read())!=null){ System.out.println(line.toString()); } System.out.println("讀取結束"); } /** * * @param outPath 輸出Parquet格式 * @param inPath 輸入普通文本文件 * @throws IOException */ static void parquetWriter(String outPath,String inPath) throws IOException{ MessageType schema = MessageTypeParser.parseMessageType("message Pair {\n" + " required binary city (UTF8);\n" + " required binary ip (UTF8);\n" + " repeated group time {\n"+ " required int32 ttl;\n"+ " required binary ttl2;\n"+ "}\n"+ "}"); GroupFactory factory = new SimpleGroupFactory(schema); Path path = new Path(outPath); Configuration configuration = new Configuration(); GroupWriteSupport writeSupport = new GroupWriteSupport(); writeSupport.setSchema(schema,configuration); ParquetWriter<Group> writer = new ParquetWriter<Group>(path,configuration,writeSupport); //把本地文件讀取進去,用來生成parquet格式文件 BufferedReader br =new BufferedReader(new FileReader(new File(inPath))); String line=""; Random r=new Random(); while((line=br.readLine())!=null){ String[] strs=line.split("\\s+"); if(strs.length==2) { Group group = factory.newGroup() .append("city",strs[0]) .append("ip",strs[1]); Group tmpG =group.addGroup("time"); tmpG.append("ttl", r.nextInt(9)+1); tmpG.append("ttl2", r.nextInt(9)+"_a"); writer.write(group); } } System.out.println("write end"); writer.close(); }} |

說下schema(寫Parquet格式數據需要schema,讀取的話"自動識別"了schema)

|

1

2

3

4

5

6

7

8

9

10

11

|

/* * 每一個字段有三個屬性:重復數、數據類型和字段名,重復數可以是以下三種: * required(出現1次) * repeated(出現0次或多次) * optional(出現0次或1次) * 每一個字段的數據類型可以分成兩種: * group(復雜類型) * primitive(基本類型) * 數據類型有 * INT64, INT32, BOOLEAN, BINARY, FLOAT, DOUBLE, INT96, FIXED_LEN_BYTE_ARRAY */ |

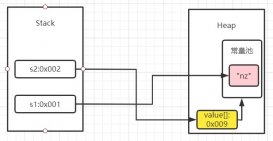

這個repeated和required 不光是次數上的區別,序列化后生成的數據類型也不同,比如repeqted修飾 ttl2 打印出來為 WrappedArray([7,7_a]) 而 required修飾 ttl2 打印出來為 [7,7_a] 除了用MessageTypeParser.parseMessageType類生成MessageType 還可以用下面方法

(注意這里有個坑--spark里會有這個問題--ttl2這里 as(OriginalType.UTF8) 和 required binary city (UTF8)作用一樣,加上UTF8,在讀取的時候可以轉為StringType,不加的話會報錯 [B cannot be cast to java.lang.String )

|

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

|

/*MessageType schema = MessageTypeParser.parseMessageType("message Pair {\n" + " required binary city (UTF8);\n" + " required binary ip (UTF8);\n" + "repeated group time {\n"+ "required int32 ttl;\n"+ "required binary ttl2;\n"+ "}\n"+ "}");*/ //import org.apache.parquet.schema.Types;MessageType schema = Types.buildMessage() .required(PrimitiveTypeName.BINARY).as(OriginalType.UTF8).named("city") .required(PrimitiveTypeName.BINARY).as(OriginalType.UTF8).named("ip") .repeatedGroup().required(PrimitiveTypeName.INT32).named("ttl") .required(PrimitiveTypeName.BINARY).as(OriginalType.UTF8).named("ttl2") .named("time") .named("Pair"); |

解決 [B cannot be cast to java.lang.String 異常:

1.要么生成parquet文件的時候加個UTF8

2.要么讀取的時候再提供一個同樣的schema類指定該字段類型,比如下面:

maven依賴(我用的1.7)

|

1

2

3

4

5

|

<dependency> <groupId>org.apache.parquet</groupId> <artifactId>parquet-hadoop</artifactId> <version>1.7.0</version></dependency> |

以上就是本文的全部內容,希望對大家的學習有所幫助,也希望大家多多支持服務器之家。

原文鏈接:http://www.cnblogs.com/yanghaolie/p/7156372.html